Updated almost 11 years ago by Martin Pierrick

The central GammaLib repo will be accessed using the https protocol, which makes use of SSL certificates. To disable certificate verification, we recommend to issue the command

$ git config --global http.sslverify "false"which disables certificate verification globally.

If you want to avoid typing your user name and password every time you make a push, you can create a file called .netrc under your root directory (i.e. ~/.netrc) with the following content:

machine cta-git.irap.omp.eu login <user> password <password>(replace

<user> and <password> by your user name and password). Once the .netrc file in place, you may simply type$ git pushto push you code changes into the repository.

Note: There seems to be a problem with git version 1.7.11.3, which does not allow pushing a new branch in the central repository or even cloning an existing project (at least when used from a Mac with OS X 10.6.8). No password is requested when it should be, and the following message appears:

error: RPC failed; result=22, HTTP code = 401 fatal: The remote end hung up unexpectedly

Updated over 10 years ago by Knödlseder Jürgen

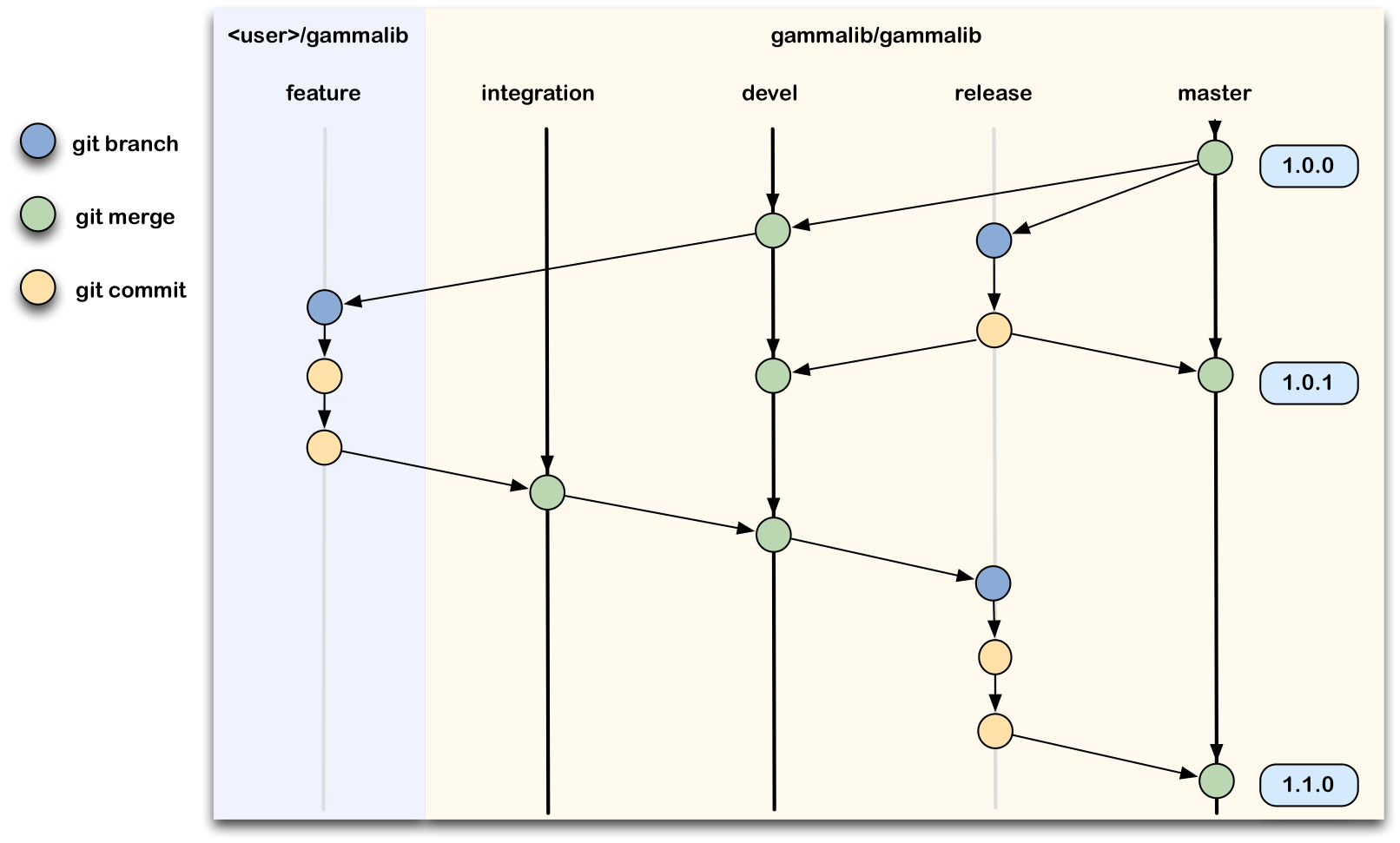

This section gives a summary of the workflow. Details are given for each of these steps in the following sections.

devel branch is the trunk.devel branch, and start a new feature branch from that.536-refactor-database-code.devel or any other branches into your feature branch while you are working.devel, consider Rebasing on devel.This way of working helps to keep work well organized, with readable history.

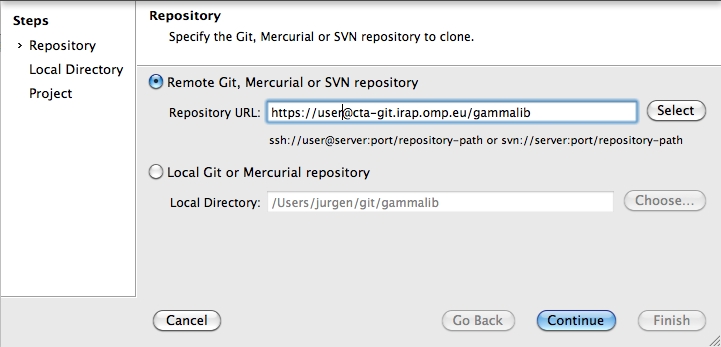

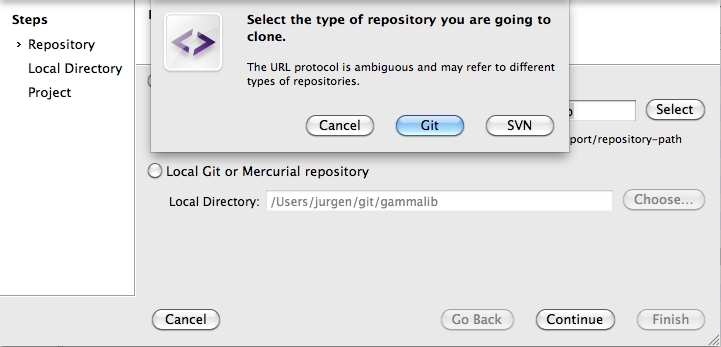

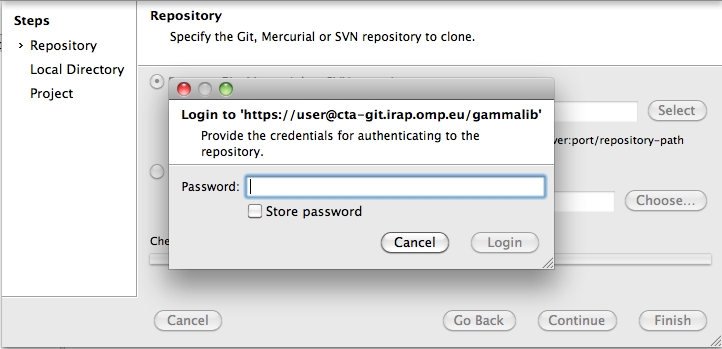

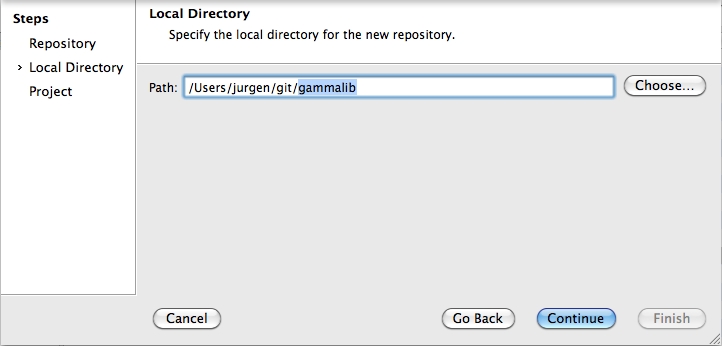

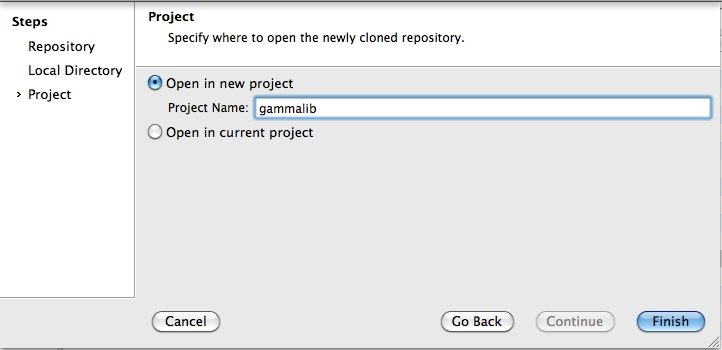

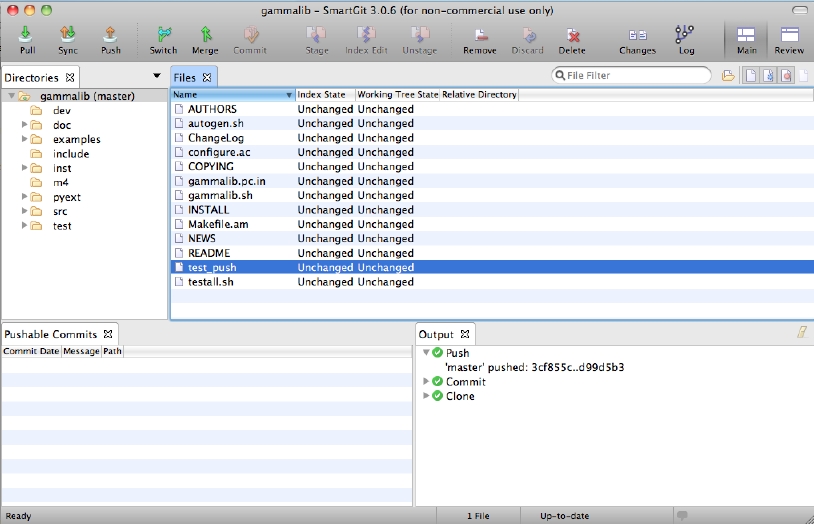

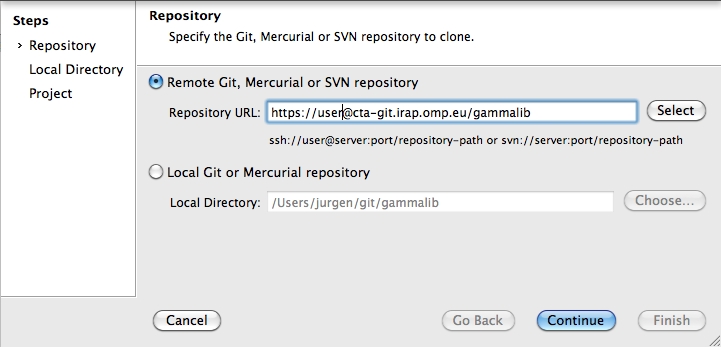

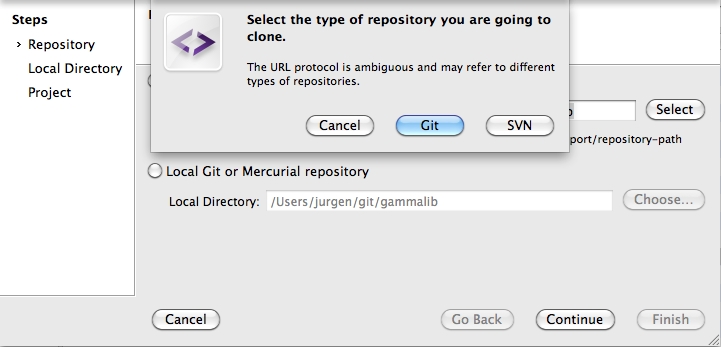

To clone the GammaLib source code, type

$ git clone https://user@cta-git.irap.omp.eu/gammalib

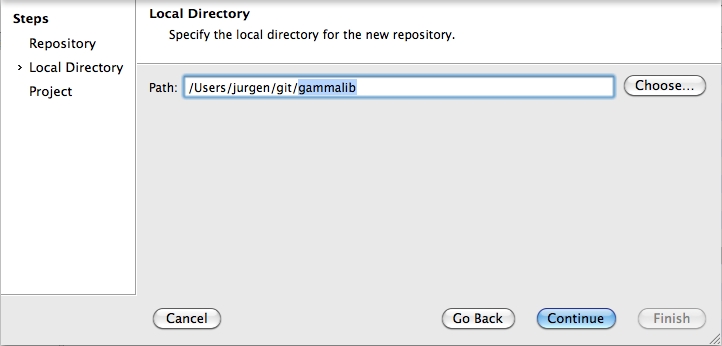

This will create a directory called ctools under the current working directory that will contain the ctools source code.

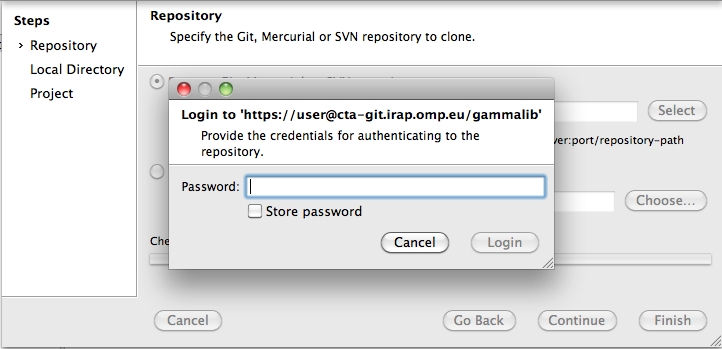

This will create a directory called gammalib under the current working directory that will contain the GammaLib source code. Here, user is your user name on the CTA collaborative platform at IRAP (the same you used to connect for reading this text). Executing this command will open a window that asks for a password (your password on the CTA collaborative platform at IRAP), and after confirming the password, your will get a clone of gammalib on your disk.

Note that the default branch from which you should start for software developments is the devel branch, and the git clone command normally automatically clone this branch from the central repository. Make sure that you will never use the master branch to start your software developments, as pushing to the master branch is not permitted. The master branch always contains the code of the last GammaLib release, and serves as seed for hotfixes. To know which branch you’re on, type

$ git status

devel, switch to the devel branch using$ git checkout devel

When you are ready to make some changes to the code, you should start a new branch. We call this new branch a feature branch.

The name of a feature branch should always start with the Redmine issue number, followed by a short informative name that reminds yourself and the rest of us what the changes in the branch are for. For example 735-add-ability-to-fly, or 123-bugfix. If you don’t find a Redmine issue for your feature, create one.

First make sure that you start from the devel branch by typing

$ git checkout develThen create a new feature branch using

$ git checkout -b 007-my-new-feature Switched to a new branch '007-my-new-feature'This creates and switches automatically to the branch

007-my-new-feature.

Now you’re ready to write some code and commit your changes. Suppose you edited the pyext/GFunction.i file. After editing, you can check the status by typing

$ git status # On branch 007-my-new-feature # Changes not staged for commit: # (use "git add <file>..." to update what will be committed) # (use "git checkout -- <file>..." to discard changes in working directory) # # modified: pyext/GFunction.i # no changes added to commit (use "git add" and/or "git commit -a")Git signals here that you have to add and commit your change.

You’ll do this by typing

$ git add pyext/GFunction.i $ git commit -am 'Remove GTools.hpp include.' [007-my-new-feature 065af59] Remove GTools.hpp include. 1 files changed, 0 insertions(+), 1 deletions(-)

Continue with editing and commit all your changes.

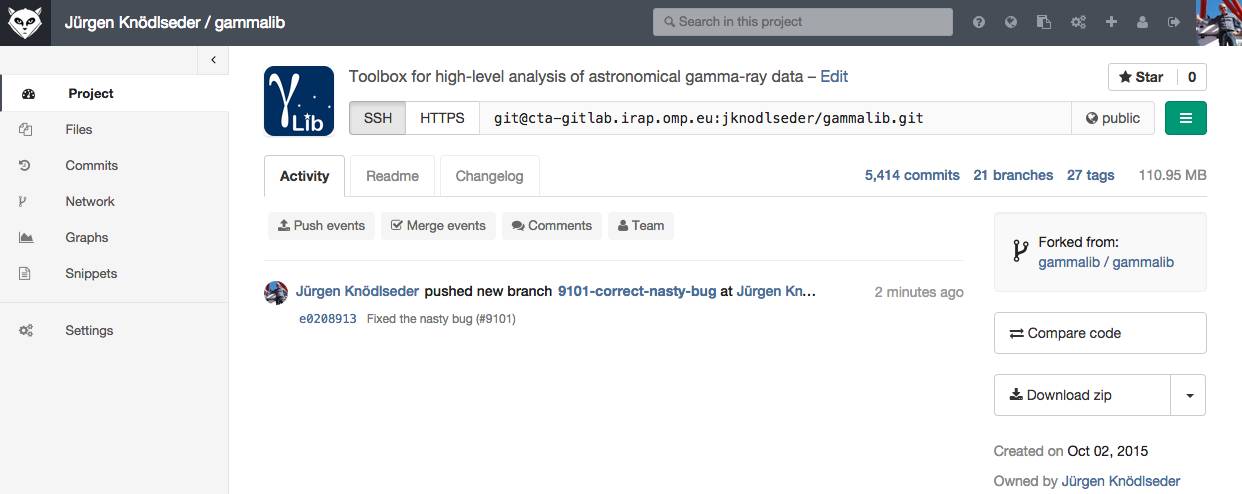

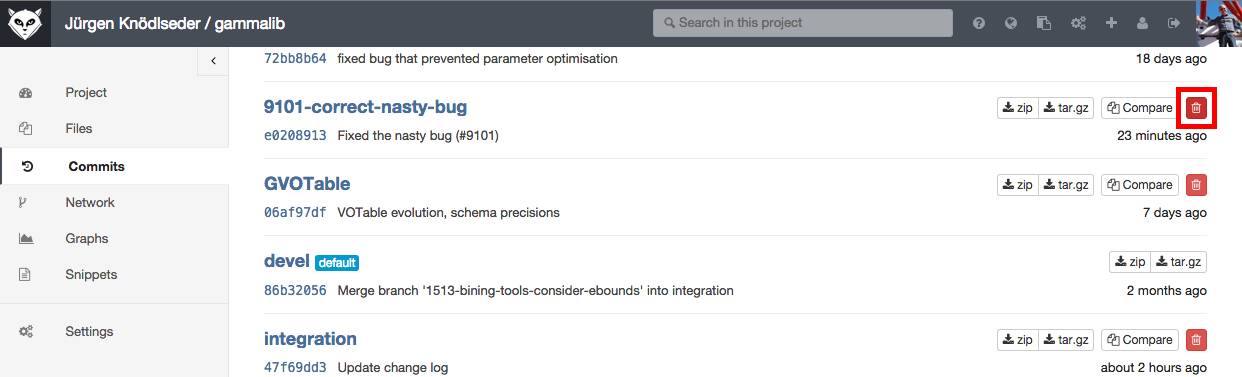

To push your new feature branch into the IRAP git repository, type

$ git push origin 007-my-new-feature Password: Counting objects: 7, done. Delta compression using up to 48 threads. Compressing objects: 100% (4/4), done. Writing objects: 100% (4/4), 390 bytes, done. Total 4 (delta 3), reused 0 (delta 0) remote: To https://github.com/gammalib/gammalib.git remote: 8139b97..07946a3 github/integration -> github/integration remote: * [new branch] 007-my-new-feature -> 007-my-new-feature To https://jknodlseder@cta-git.irap.omp.eu/gammalib * [new branch] 007-my-new-feature -> 007-my-new-featureThis pushed your modifications into the repository. Note that this created a new branch in the repository.

Eventually, the devel branch has advanced while you developed the new feature. In this case, you should rebase your code before asking for merging. If you’re not sure what this means, better skip this step and leave it to the integration manager to work out how to deal with the diverged branches. If you feel however you want to help the manager with integration, rebase your branch using

$ git fetch origin Password: $ git pull origin 007-my-new-feature Password: From https://cta-git.irap.omp.eu/gammalib * branch 007-my-new-feature -> FETCH_HEAD Already up-to-date. $ git checkout 007-my-new-feature Already on '007-my-new-feature' Your branch and 'devel' have diverged, and have 1 and 1 different commit(s) each, respectively. $ git branch tmp 007-my-new-feature $ git rebase devel First, rewinding head to replay your work on top of it... Applying: Remove GTools.hpp include.Here we created a backup of

007-my-new-feature into tmp for safety.

If your feature branch is already in the central repository and you rebase, you will have to force push the branch; a normal push would give an error. Use this command to force-push:

$ git pull origin 007-my-new-feature

Password:

From https://cta-git.irap.omp.eu/gammalib

* branch 007-my-new-feature -> FETCH_HEAD

Merge made by the 'recursive' strategy.

$ git push -f origin 007-my-new-feature

Password:

Counting objects: 5, done.

Delta compression using up to 4 threads.

Compressing objects: 100% (3/3), done.

Writing objects: 100% (3/3), 492 bytes, done.

Total 3 (delta 2), reused 0 (delta 0)

remote: To https://github.com/gammalib/gammalib.git

remote: 1f00150..20cf8c5 007-my-new-feature -> 007-my-new-feature

remote: 35d6408..1f00150 github/007-my-new-feature -> github/007-my-new-feature

To https://jknodlseder@cta-git.irap.omp.eu/gammalib

1f00150..20cf8c5 007-my-new-feature -> 007-my-new-feature

Note that this will overwrite the branch in the central repository, i.e. this is one of the few ways you can actually lose commits with git.

Note: I don’t really understand why I need the git pull before the push, but without I get the following error message:

$ git push -f origin 007-my-new-feature Password: Counting objects: 11, done. Delta compression using up to 4 threads. Compressing objects: 100% (7/7), done. Writing objects: 100% (7/7), 1.44 KiB, done. Total 7 (delta 6), reused 0 (delta 0) remote: error: denying non-fast-forward refs/heads/007-my-new-feature (you should pull first)

When all looks good you can delete your backup branch using

$ git branch -D tmp Deleted branch tmp (was 1f00150).

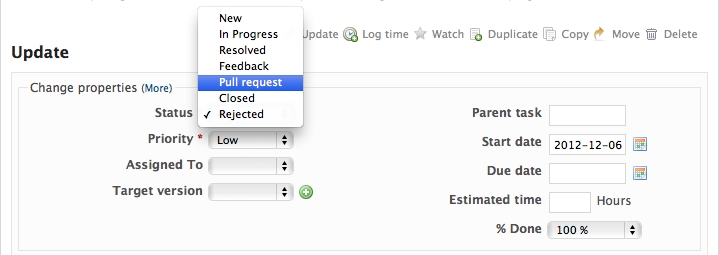

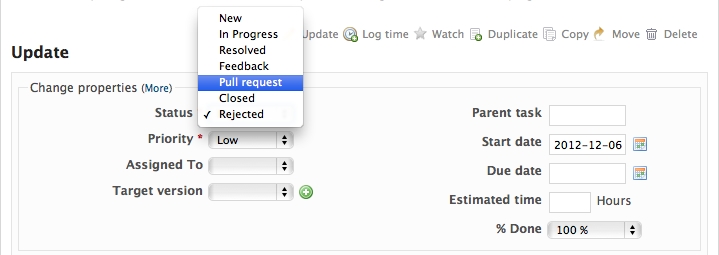

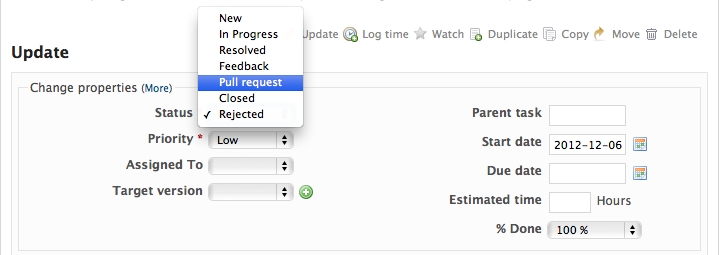

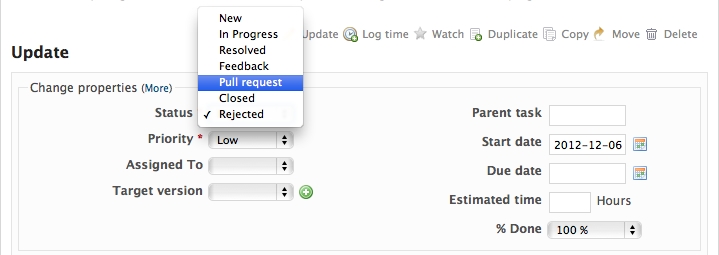

When you are ready to ask for someone to review your code and consider a merge, change the status of the issue you’re working on to Pull Request:

In the notes field, describe the set of changes, and put some explanation of what you’ve done. Say if there is anything you’d like particular attention for - like a complicated change or some code you are not happy with.

If you don’t think your request is ready to be merged, just say so in your pull request message. This is still a good way of getting some preliminary code review.

Updated over 11 years ago by Knödlseder Jürgen

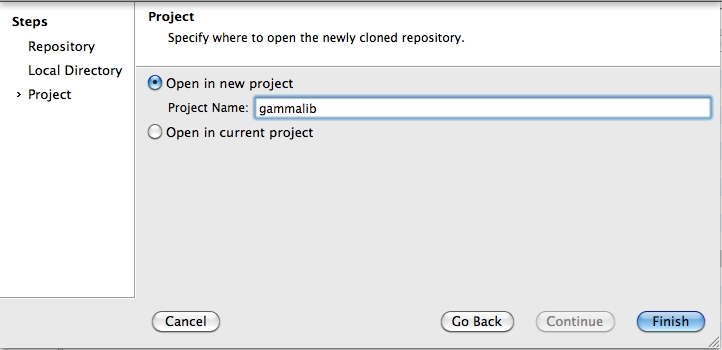

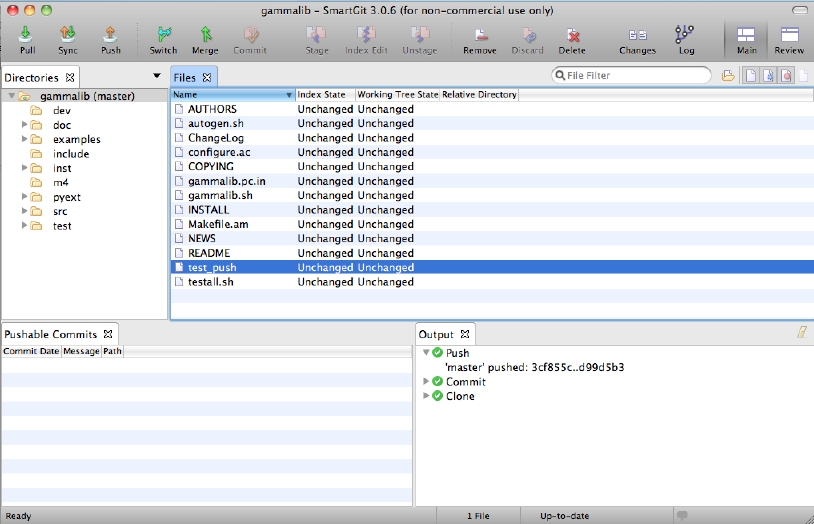

As first step, a clone of the central GammaLib repository is needed:

$ git clone https://manager@cta-git.irap.omp.eu/gammalib Cloning into 'gammalib'... Password: remote: Counting objects: 22150, done. remote: Compressing objects: 100% (7596/7596), done. remote: Total 22150 (delta 17330), reused 18491 (delta 14497) Receiving objects: 100% (22150/22150), 80.12 MiB | 192 KiB/s, done. Resolving deltas: 100% (17330/17330), done.where

manager is the user name of the integration manager.

If there are only a few commits, consider rebasing to upstream:

$ git checkout 007-my-new-feature $ git fetch origin $ git rebase origin/devel

If there are a longer series of related commits, consider a merge instead:

$ git checkout 007-my-new-feature $ git merge --no-ff origin/develNote the

--no-ff above. This forces git to make a merge commit, rather than doing a fast-forward, so that these set of commits branch off devel then rejoin the main history with a merge, rather than appearing to have been made directly on top of devel.

Now, in either case, you should check that the history is sensible and you have the right commits:

$ git log --oneline --graph $ git log -p origin/devel..The first line above just shows the history in a compact way, with a text representation of the history graph. The second line shows the log of commits excluding those that can be reached from

devel (origin/devel), and including those that can be reached from current HEAD (implied with the .. at the end). So, it shows the commits unique to this branch compared to devel. The -p option shows the diff for these commits in patch form.

Now it’s time to merge the feature branch in the integration branch:

$ git checkout integration

Branch integration set up to track remote branch integration from origin.

Switched to a new branch 'integration'

$ git merge 007-my-new-feature

Updating ccba491..623d38d

Fast-forward

.gitignore | 20 ++++++++---

pyext/GDerivative.i | 77 +++++++++++++++++++++++++++++++++++++++++++++

pyext/GFunction.i | 46 +++++++++++++++++++++++++++

pyext/gammalib/numerics.i | 2 +

4 files changed, 139 insertions(+), 6 deletions(-)

create mode 100644 pyext/GDerivative.i

create mode 100644 pyext/GFunction.i

$ git push origin integration

Password:

Counting objects: 7, done.

Delta compression using up to 4 threads.

Compressing objects: 100% (4/4), done.

Writing objects: 100% (4/4), 392 bytes, done.

Total 4 (delta 3), reused 0 (delta 0)

remote: To https://github.com/gammalib/gammalib.git

remote: ccba491..623d38d integration -> integration

remote: 065af59..8c6cc48 github/007-my-new-feature -> github/007-my-new-feature

To https://jknodlseder@cta-git.irap.omp.eu/gammalib

ccba491..623d38d integration -> integration

The push will automatically launch the integration pipeline on Jenkins.

You should verify the all checks are passed with success.

Once the new feature is validated, merge the feature in the devel branch:

$ git checkout devel

Switched to branch 'devel'

$ git merge integration

Updating cb9b2fe..623d38d

Fast-forward

pyext/GFunction.i | 1 -

1 files changed, 0 insertions(+), 1 deletions(-)

$ git push origin

Total 0 (delta 0), reused 0 (delta 0)

remote: To https://github.com/gammalib/gammalib.git

remote: cb9b2fe..623d38d devel -> devel

remote: ccba491..623d38d github/integration -> github/integration

To https://jknodlseder@cta-git.irap.omp.eu/gammalib

cb9b2fe..623d38d devel -> devel

$ git checkout devel Already on 'devel' $ git branch -D 007-my-new-feature Deleted branch 007-my-new-feature (was 816ac2c). $ git push origin :007-my-new-feature Password: remote: To https://github.com/gammalib/gammalib.git remote: - [deleted] 007-my-new-feature remote: cb9b2fe..623d38d github/devel -> github/devel To https://jknodlseder@cta-git.irap.omp.eu/gammalib - [deleted] 007-my-new-featureNote the colon : before

007-my-new-feature. See also: http://github.com/guides/remove-a-remote-branch

Updated over 11 years ago by Knödlseder Jürgen

The COMPTEL telescope aboard the CGRO satellite has been flown from 1991-2000, collecting an unprecedented set of data in the energy range from 750 keV up to 30 MeV. Some information about the COMPTEL telescope and access to the data can be found at the following link: http://heasarc.gsfc.nasa.gov/docs/cgro/comptel.

Although the COMPTEL database is very valuable, no software tools are publically available to exploit the COMPTEL data.

A description of the COMPTEL datasets can be found at http://wwwgro.unh.edu/comptel/comptel_datasets.html.

All COMPTEL data are available in FITS format. The following datasets are relevant for COMPTEL data analysis:Information about the processed data is available at http://wwwgro.unh.edu/comptel/compass/compass_users.html.

Here some further useful links concerning the data:The COMPTEL data were analysed using the COMPASS software that is not publically available.

Here some useful links:Updated almost 9 years ago by Knödlseder Jürgen

The first step in a code release is to branch off the actual code in devel and merge it into the release branch. This is done for an authorized code manager using the following sequence of commands:

git checkout release git merge devel

Before releasing the code, you have to modify a number of files to incorporate release information in the code distribution and to update the code version number. This may have been done before during the development. The command sequence for updating release information is:

git checkout release nano configure.ac AC_INIT([gammalib], [0.10.0], [jurgen.knodlseder@irap.omp.eu], [gammalib]) nano gammalib.pc.in Version: 0.10.0 nano ChangeLog ... (update the change log) ... nano NEWS ... (update the NEWS) ... nano README ... (update the README) ... nano doc/Doxyfile PROJECT_NUMBER = 0.10.0 nano doc/source/conf.py # The short X.Y version. version = '0.10' # The full version, including alpha/beta/rc tags. release = '0.10.0' git add * git commit -m "Set release information for release X.Y.Z." git push

The nano commands will open an editor (provided you have nano installed; otherwise use any other convenient editor). The lines following the nano command indicate which specific lines need to be changed. Changes in the files ChangeLog, NEWS and README need some more editing. Please take some time to write all important things done, as GammaLib users will later rely on this information for their work! ChangeLog and NEWS should have been updated while adding new features, but you probably need to adapt the version number and release date.

Use the release branch in the central repository to perform extended code testing. The code testing is done by the release pipeline in Jenkins. Make sure that this pipeline runs fully through before continuing. If errors occurred in the testing, correct them and run the pipeline again unit the pipeline ends with success. Don’t forget to push any changes into the repository.

In addition to the pipeline, apply the testall.sh script in the gammalib folder to check all test executables and scripts.

Use the script dev/release_gammalib.sh to build a tarball of the GammaLib release. This is usually done on the kepler.cesr.fr server. Should be moved into Jenkins.

Test that the tarball compiles and all checks run. Type

tar xvf ../gammalib-0.10.0.tar.gz cd gammalib-0.10.0 ./configure make make check

Should be moved into Jenkins.

TBW

Now you can merge the release branch into the master branch using the commands:

git checkout master git merge release

Now you can tag the release using

git tag -a GammaLib-0.10.0 -m "GammaLib release 0.10.0" git push --tags

We use here an annotated tag with a human readable tag message. Please use always the same format. In the above example, the GammaLib version X.Y.Z was 0.10.0.

Now we merge the master branch back in the devel branch to make sure that the devel branch incorporates all modifications that were made during the code release procedure.

git checkout devel git merge release git push

You now should being the devel branch ahead of the master branch. This means that the devel branch should have an additional commit with respect to the master branch. This is not mandatory, but it assures later that the master branch and the devel branch will never point to the same commit. In that way, git clone will always fetch the devel branch, which is the required default behavior.

To bring the devel branch ahead, execute following commands:

git checkout devel nano AUTHORS git add AUTHORS git commit -m "Bring develop branch ahead of master branch after code release."

The nano AUTHORS step opens the file AUTHORS in an editor. Just add or remove a blank line to make a file modification.

Now you’re done!

Updated over 11 years ago by Knödlseder Jürgen

This page summarizes the status of the GammaLib code.

Cppcheck is a static analysis tool for C/C++ code. Unlike C/C++ compilers and many other analysis tools it does not detect syntax errors in the code. Cppcheck primarily detects the types of bugs that the compilers normally do not detect. The goal is to detect only real errors in the code (i.e. have zero false positives).

In the frame below you find the latest Cppcheck result for GammaLib.

In the frame below you find the latest coverage result for GammaLib.

SLOCCount is a set of tools for counting physical Source Lines of Code (SLOC) in a large number of languages of a potentially large set of programs.

In the frame below you find the latest SLOCCount result for GammaLib.

Updated over 11 years ago by Knödlseder Jürgen

This pages summarizes a couple of principles that should be followed during code development.

The necessity of typecasting in using a derived class indicates that the abstract interface of the base class is poorly defined. When developing a new derived class and when you find it necessary to perform typecasting, think about how the interface of the abstract base class could be modified to avoid the typecasting. If you’re not the owner of the abstract base class, speak with the owner about it and work towards an improvement of the interface.

Note that such interface modifications have to be made early in the project, as changing an interface often introduces backward compatibility problems (unless you simply added new method).

Updated over 11 years ago by Knödlseder Jürgen

This pages summarizes some coding techniques that are used in GammaLib.

Interface classes are widely used throughout GammaLib. An interface class is an abstract virtual base class, i.e. all class that only has pure virtual methods. This forces the derives class to implement all the pure virtual methods.

Registries are widely used in GammaLib to collect the various derived classes that may exist for a base class. This allows automatic recognition of GammaLib of all derived classes that exist, and this even without recompilation of the code. One area where registries are widely used are model components. For example, by defining GModelSpectralRegistry class, all spectral models that are available through a registry, allowing for example automatic parsing of an XML file.

We illustrate the mechanism using the GModelSpectralRegistry class. Here an excerpt of the class definition:

class GModelSpectralRegistry {

public:

GModelSpectralRegistry(const GModelSpectral* model);

GModelSpectral* alloc(const std::string& type) const;

private:

static int m_number; //!< Number of models in registry

static std::string* m_names; //!< Model names

static const GModelSpectral** m_models; //!< Pointer to seed models

};

All class members are static, so that every instance of the class gives access to the same data. The static variables are initialised in GModelSpectralRegistry.cpp:int GModelSpectralRegistry::m_number(0); std::string* GModelSpectralRegistry::m_names(0); const GModelSpectral** GModelSpectralRegistry::m_models(0);

m_number counts the number of derived classes that are registered, m_names points to a vector of string elements that contain the unique names of the derived classes, and m_models are pointers to instances of the different derived classes. These instances are also called the seeds.

The central method of the GModelSpectralRegistry class is the alloc() method. This method allocates a new instance of a derived class using the unique name of the class. For example, by specifying

GModelSpectralRegistry registry;

GModelSpectral* spectral = registry("PowerLaw");

the pointer spectral will hold an instance of GModelSpectralPlaw. This is achieved by searching the array of m_names, and if a match is found, cloning the seed instance:

GModelSpectral* GModelSpectralRegistry::alloc(const std::string& type) const

{

GModelSpectral* model = NULL;

for (int i = 0; i < m_number; ++i) {

if (m_names[i] == type) {

model = m_models[i]->clone();

break;

}

}

return model;

}

If the requested model is not found, NULL is returned.

A spectral model is registered to the registry by adding two global variables at the top of the corresponding .cpp file. Here the example for GModelSpectralPlaw.cpp:

const GModelSpectralPlaw g_spectral_plaw_seed; const GModelSpectralRegistry g_spectral_plaw_registry(&g_spectral_plaw_seed);The first line allocates one instance of the class, and the second line stores the address of this instance in the registry using a registry constructor.

Updated over 11 years ago by Knödlseder Jürgen

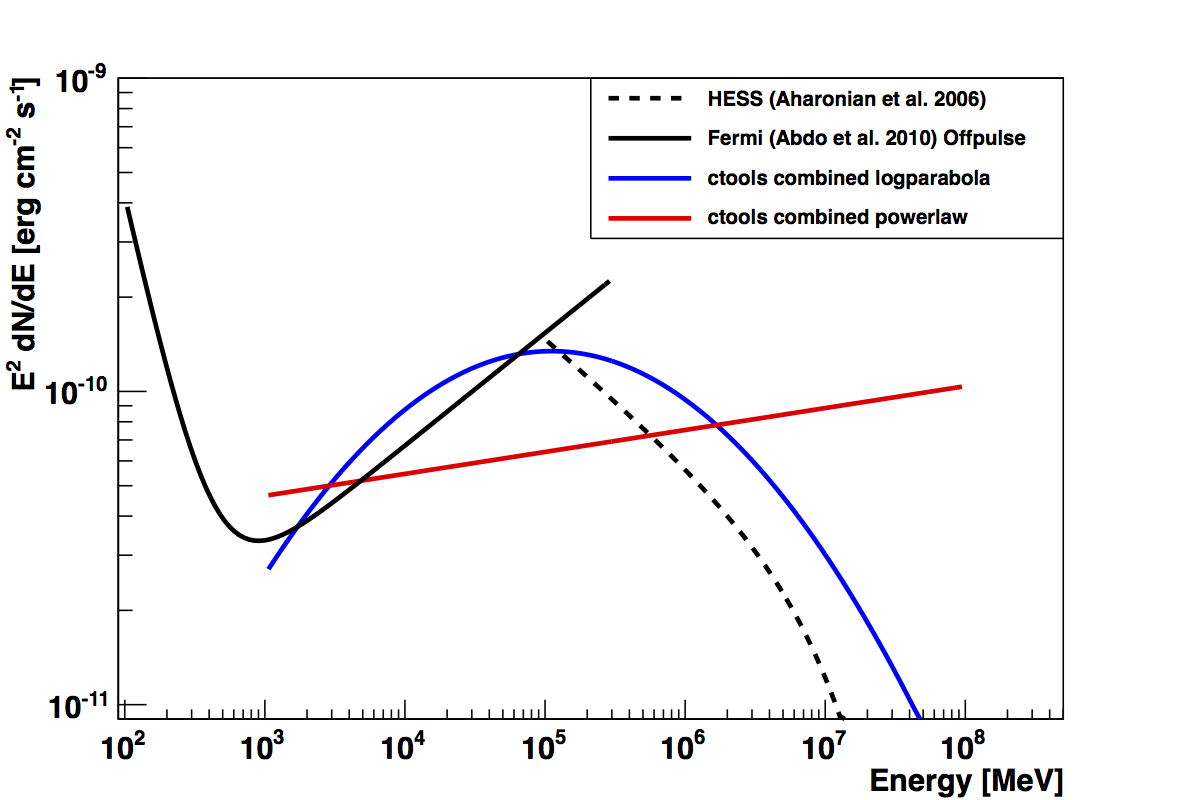

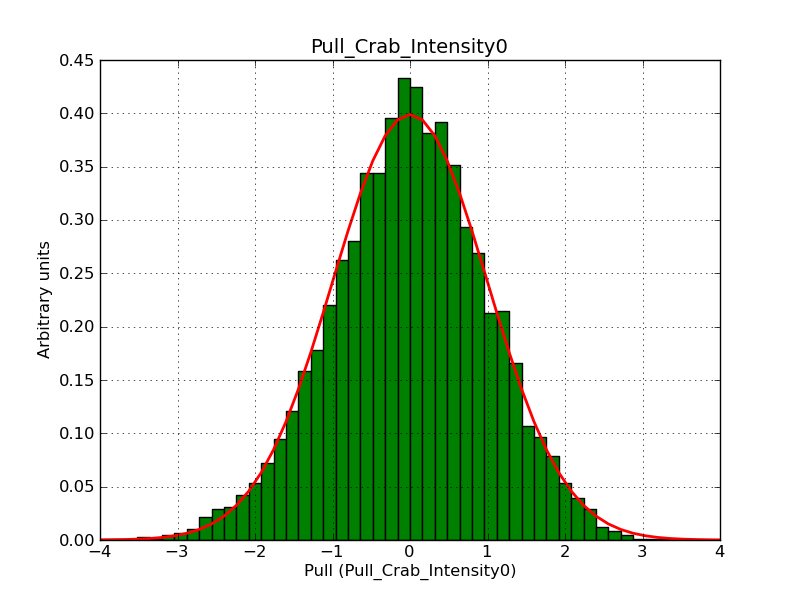

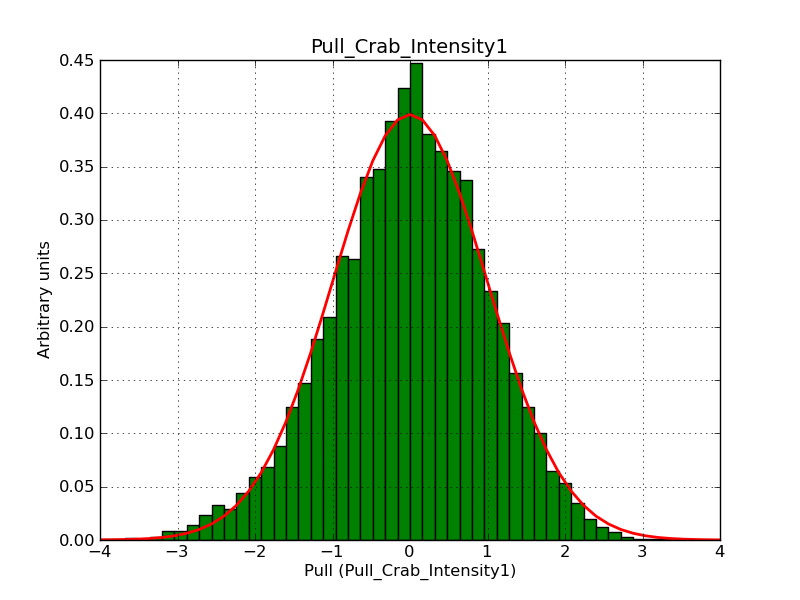

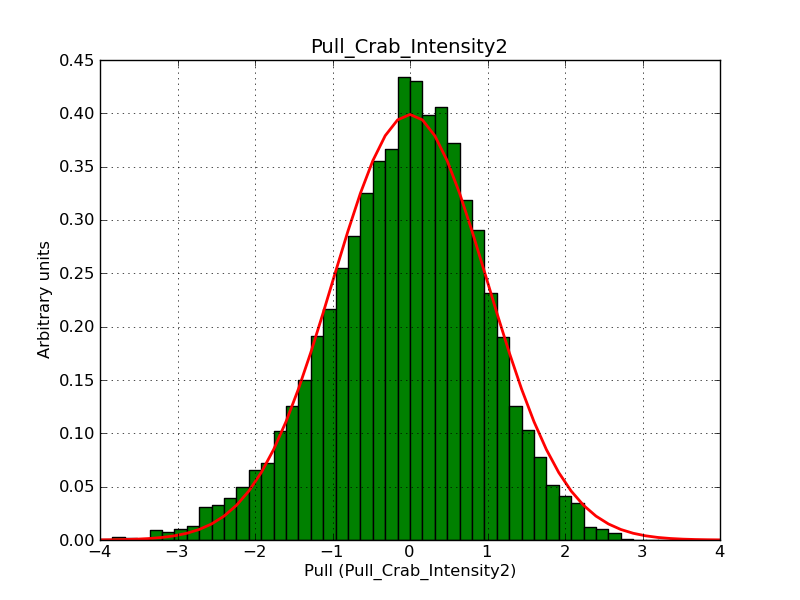

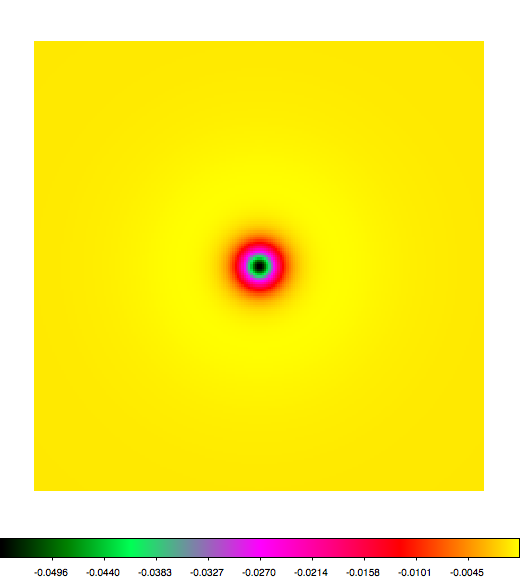

This page summarizes analysis results obtained using GammaLib on the Crab.

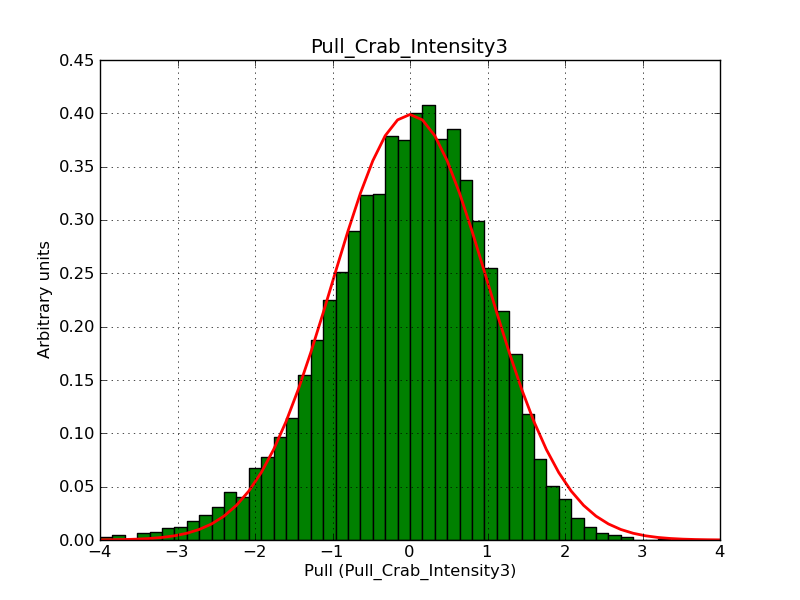

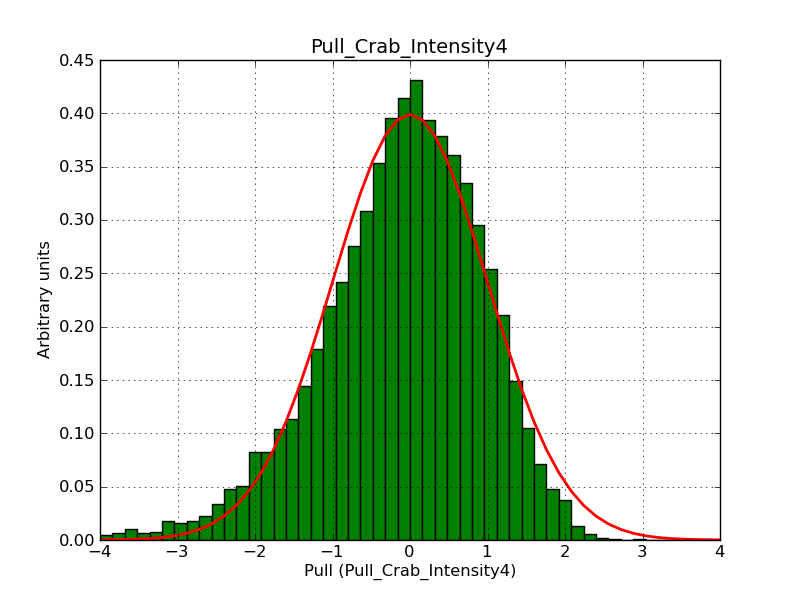

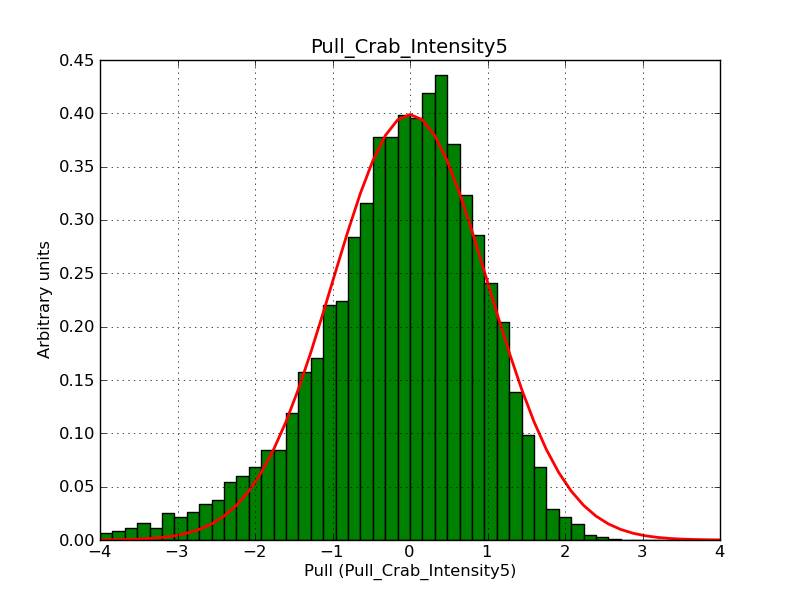

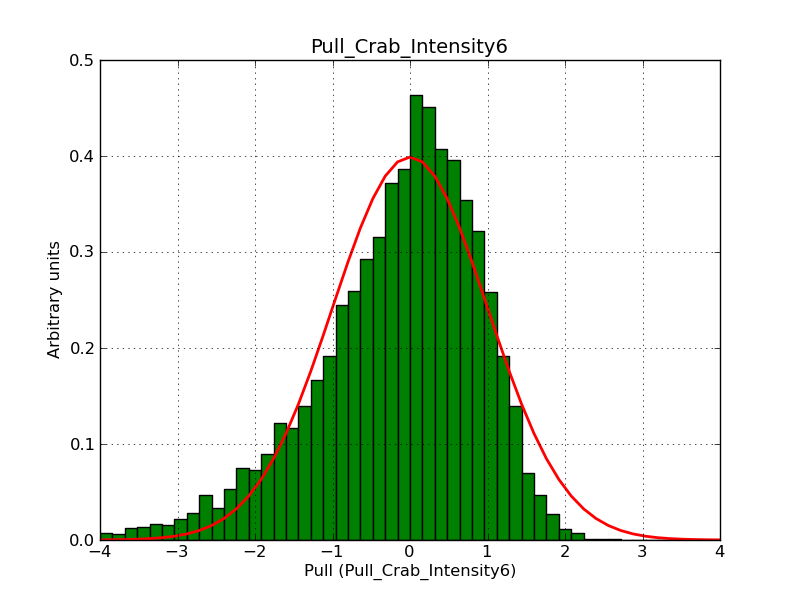

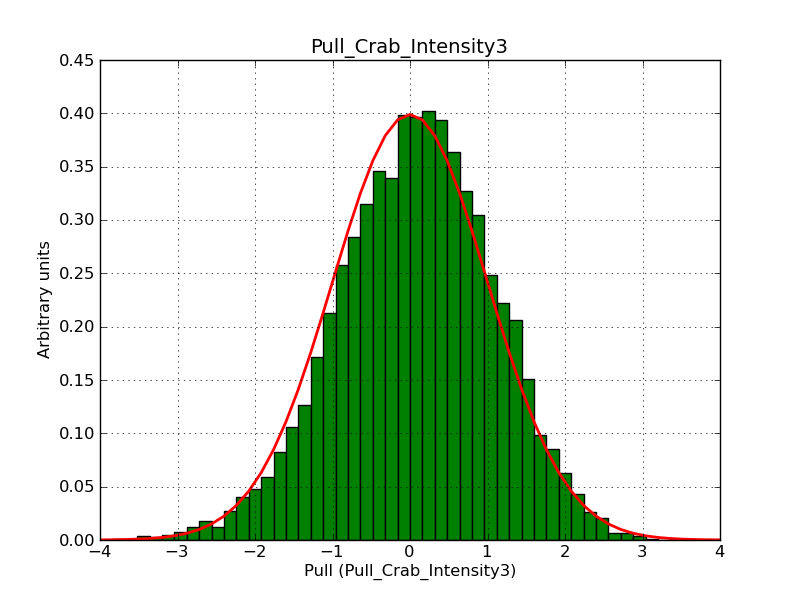

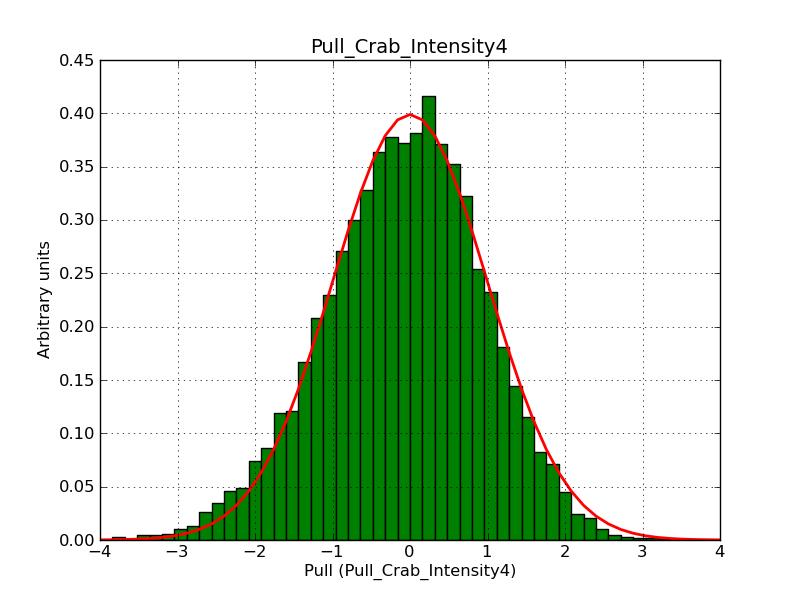

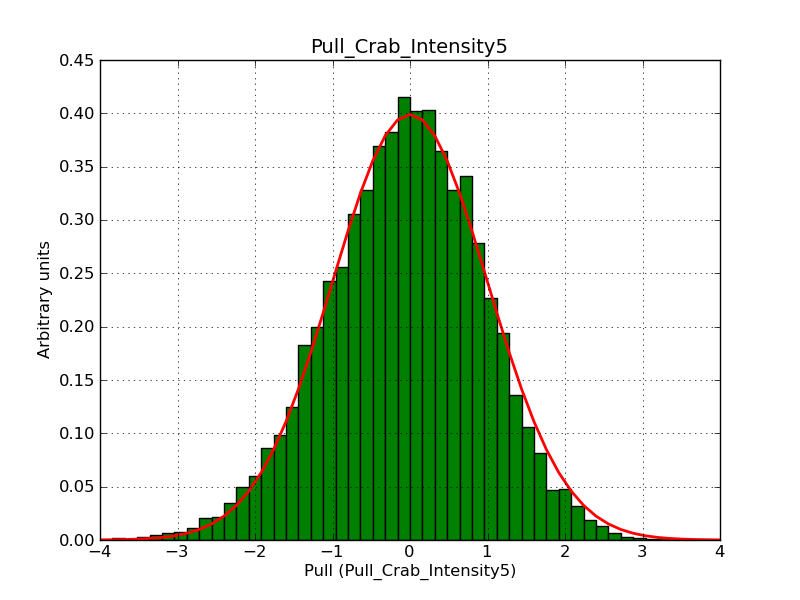

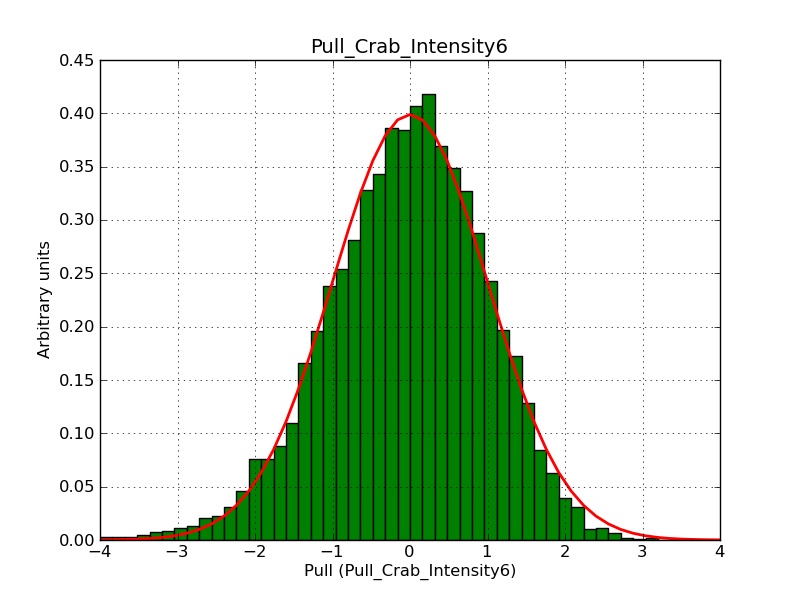

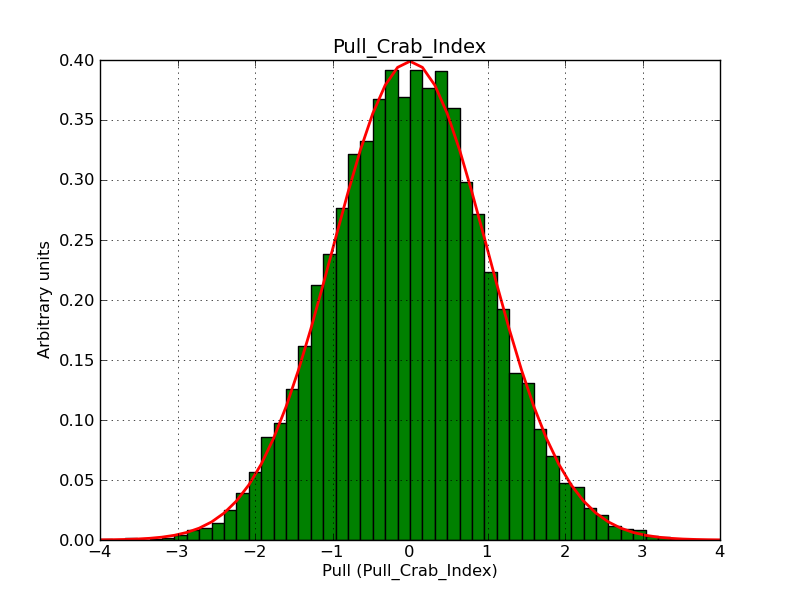

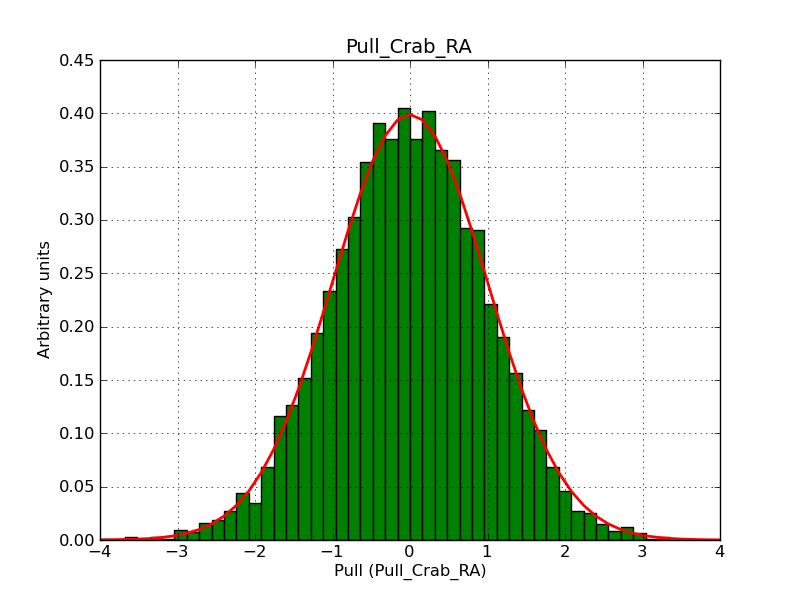

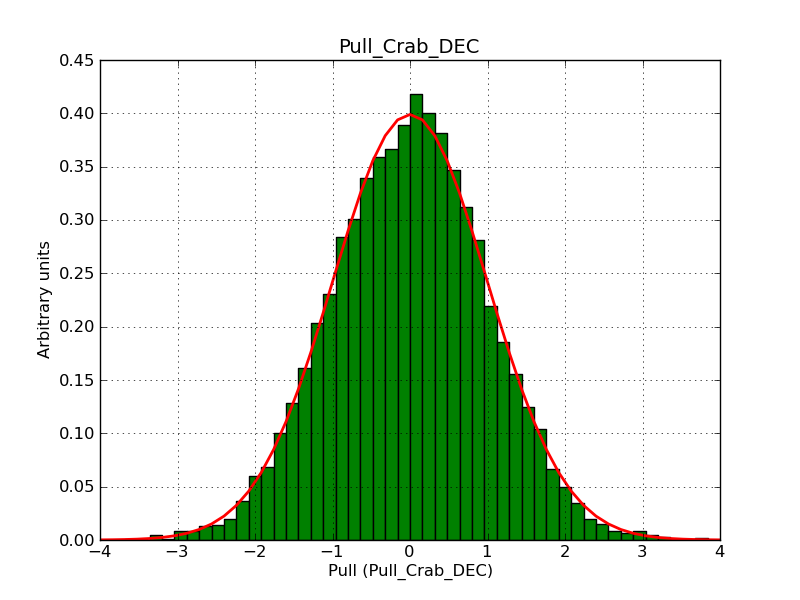

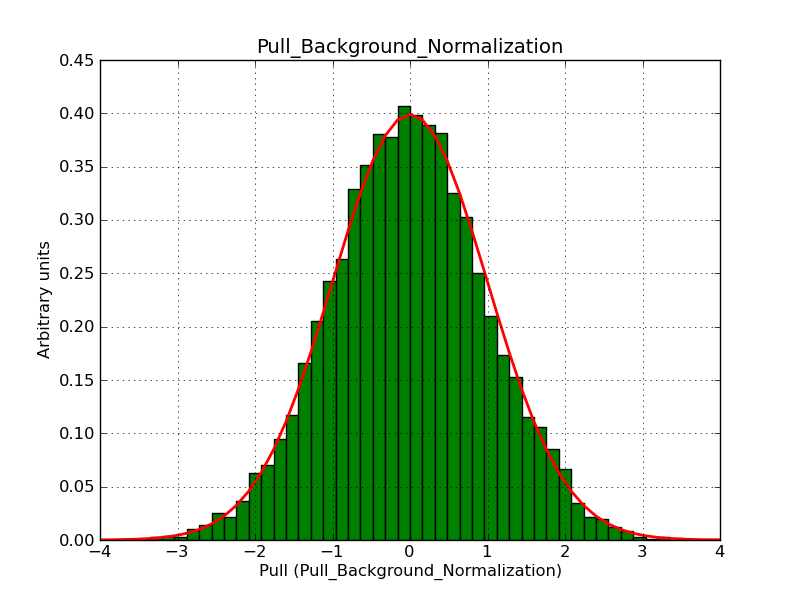

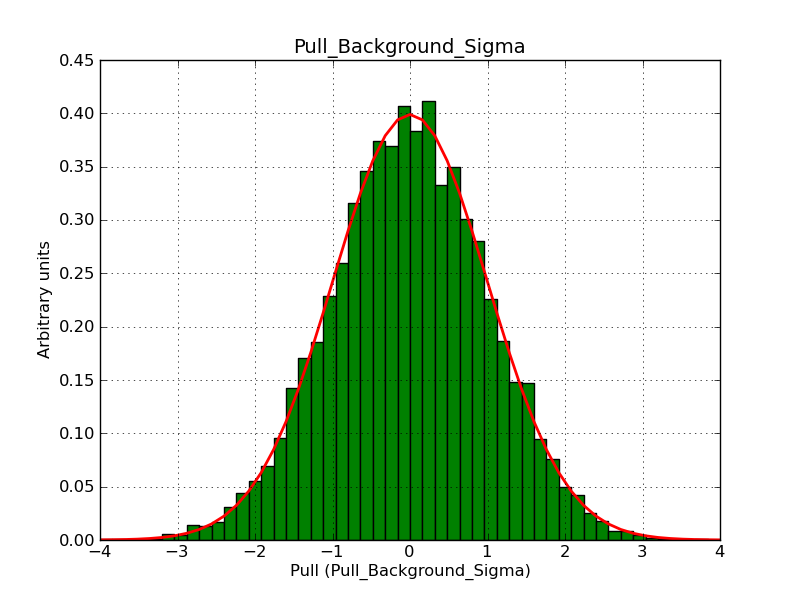

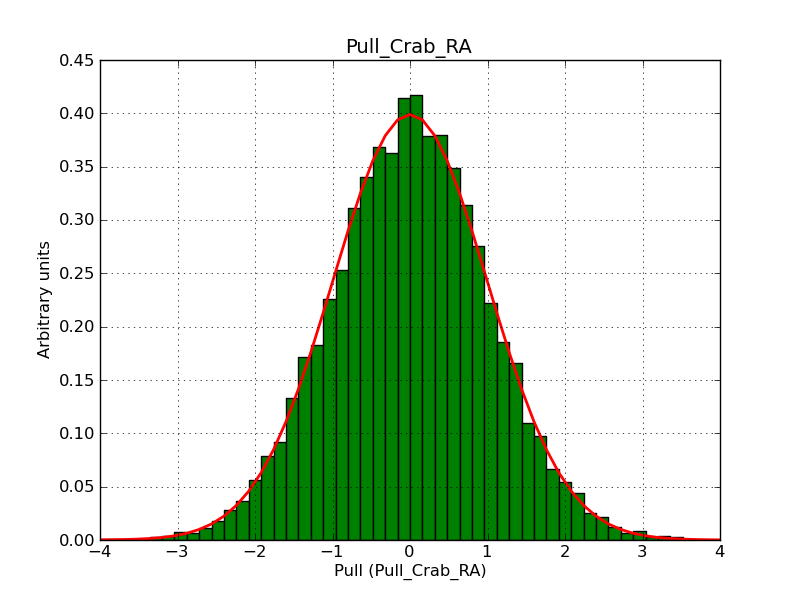

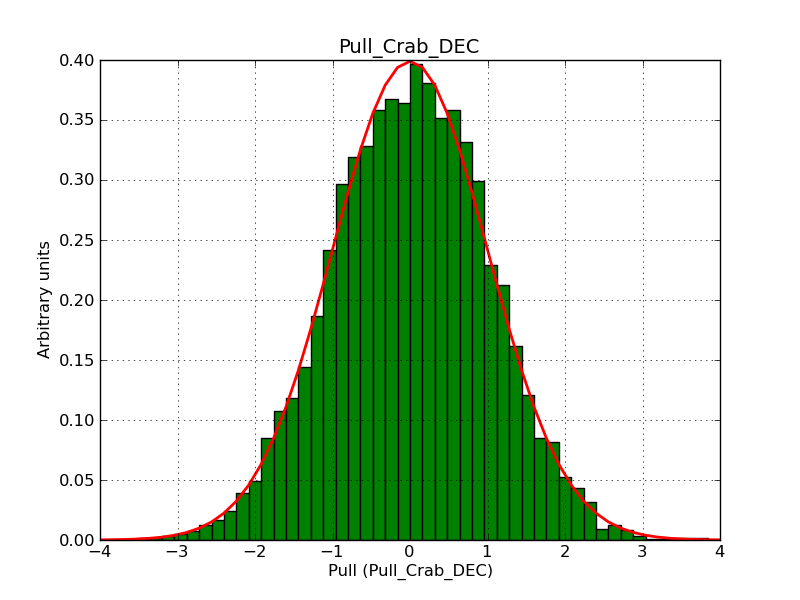

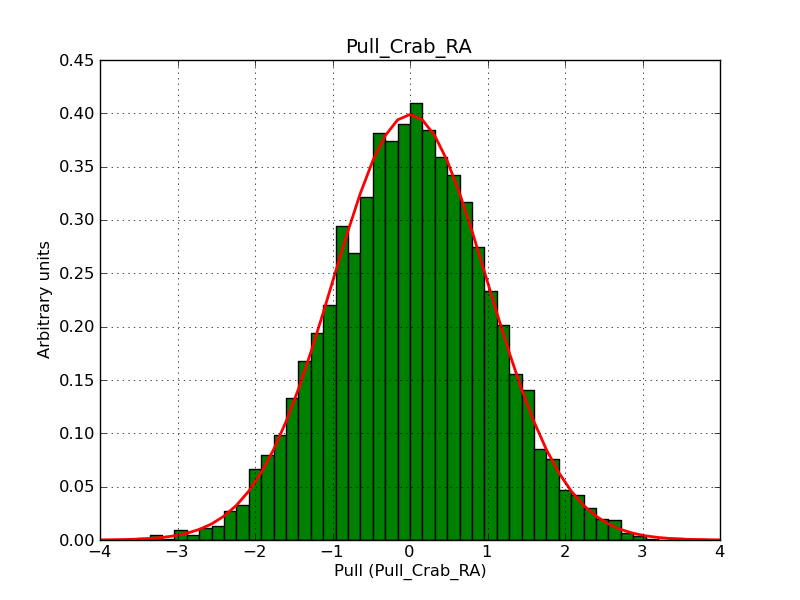

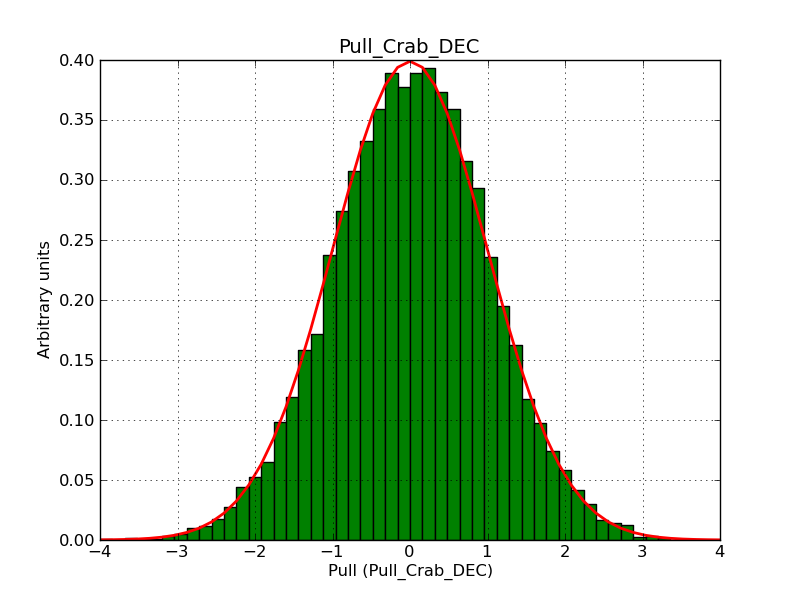

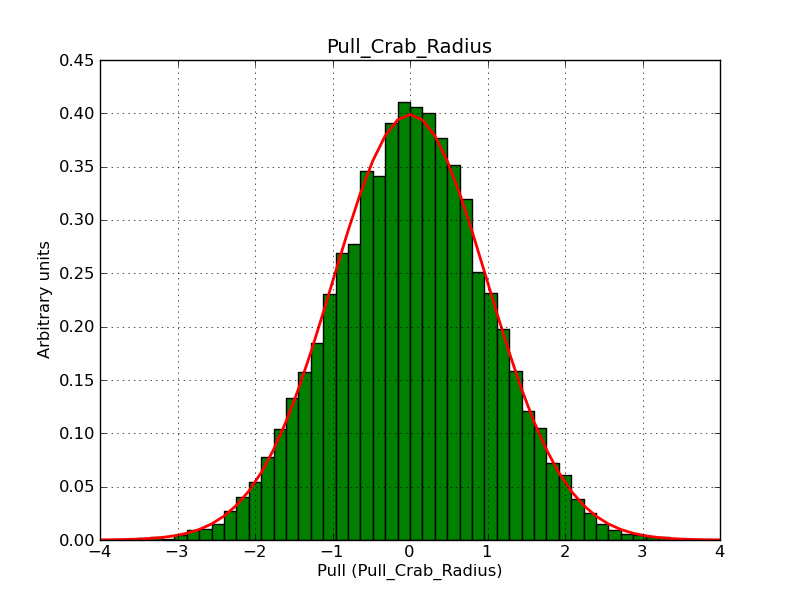

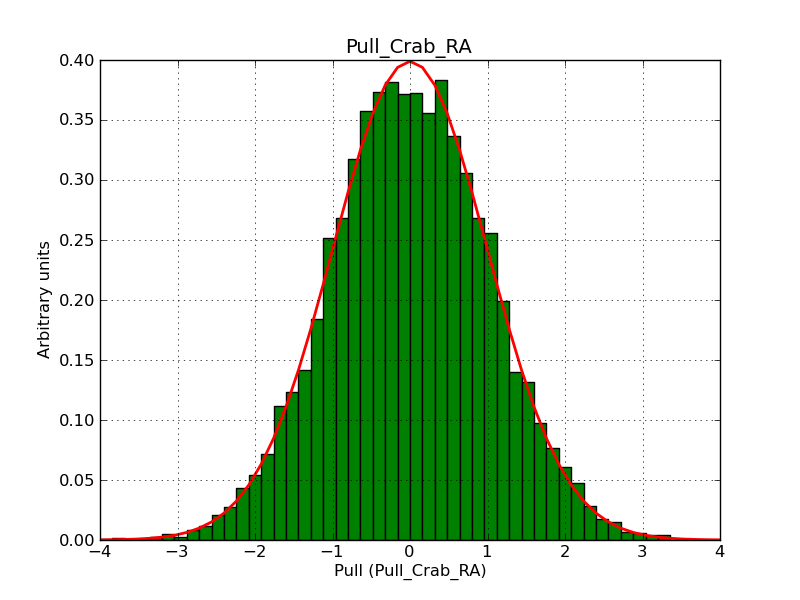

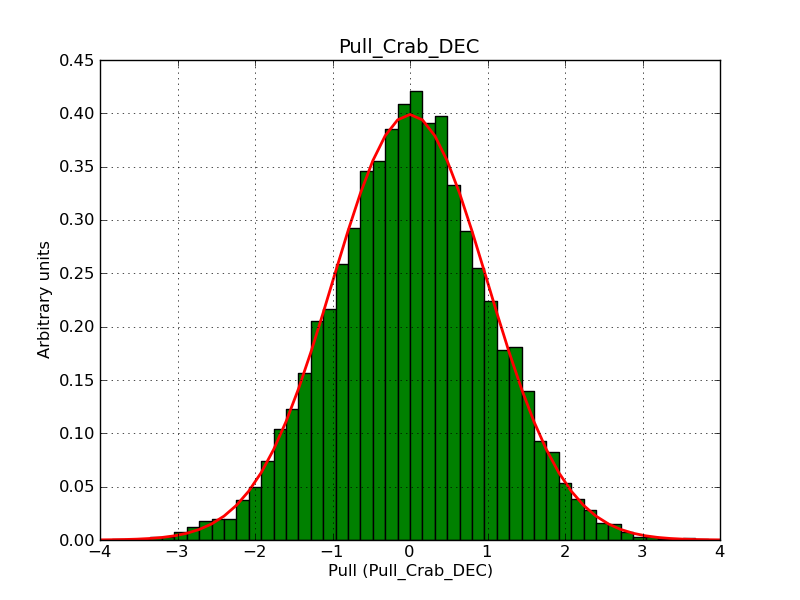

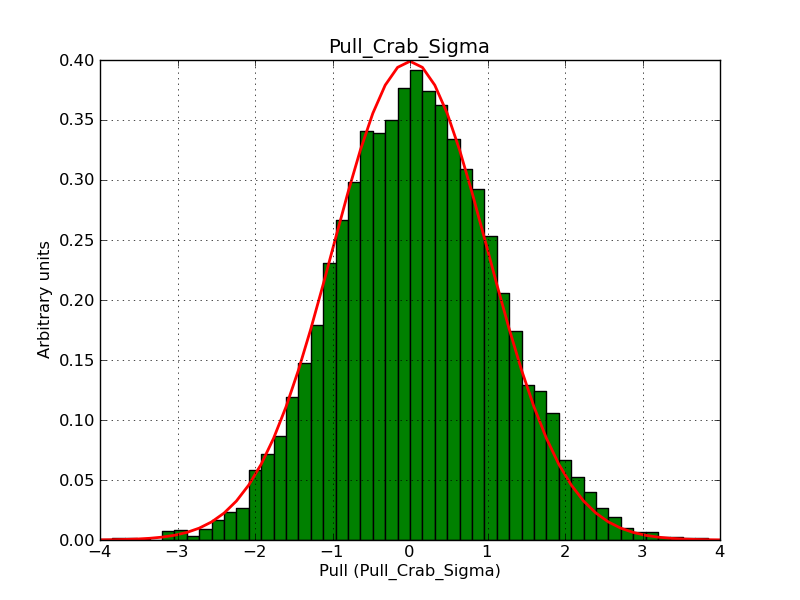

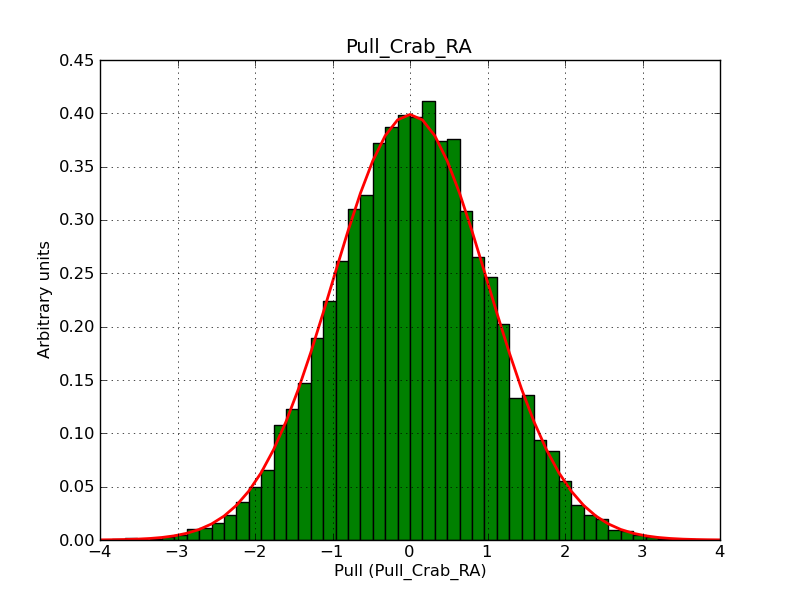

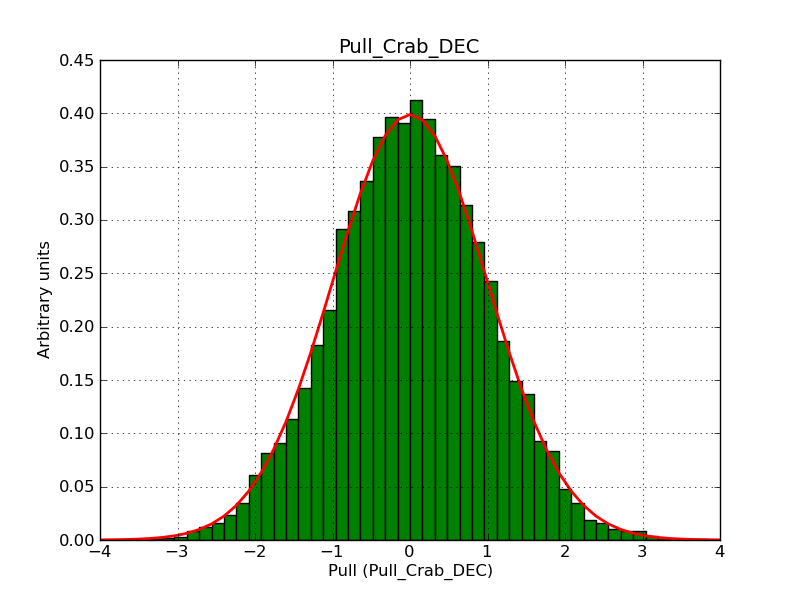

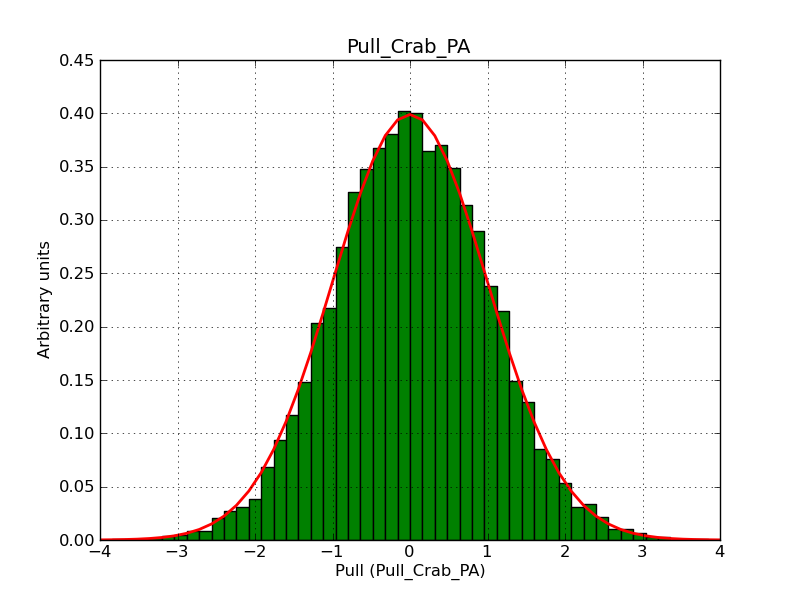

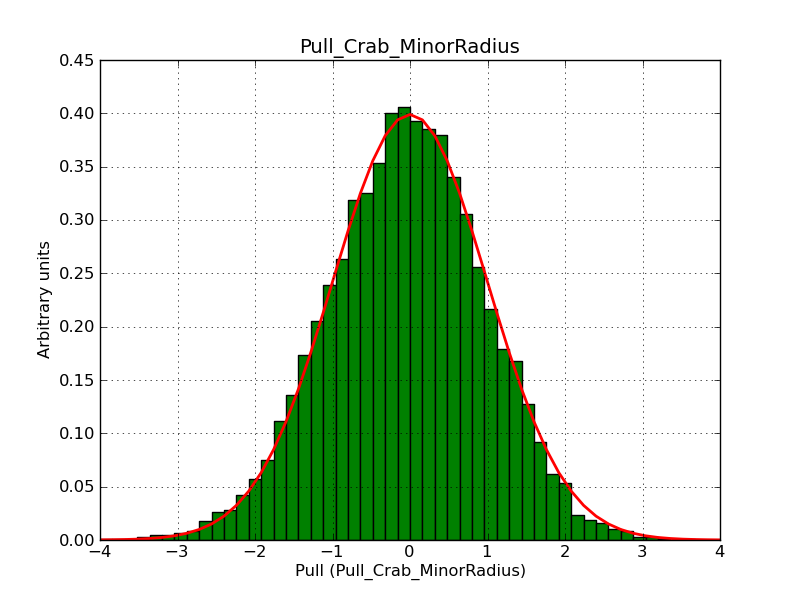

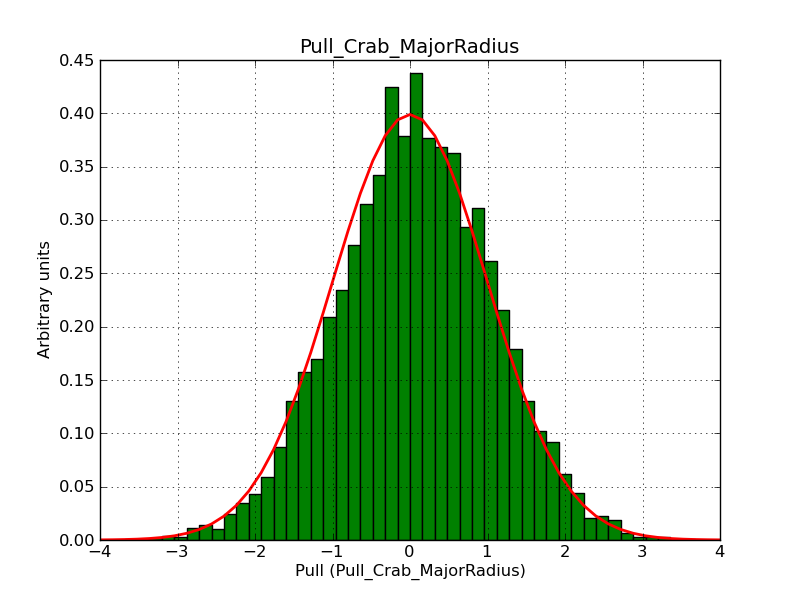

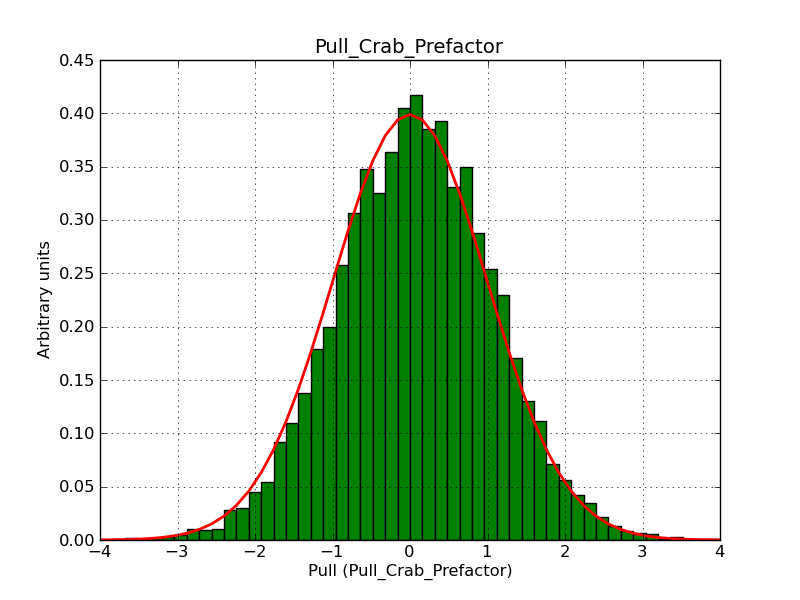

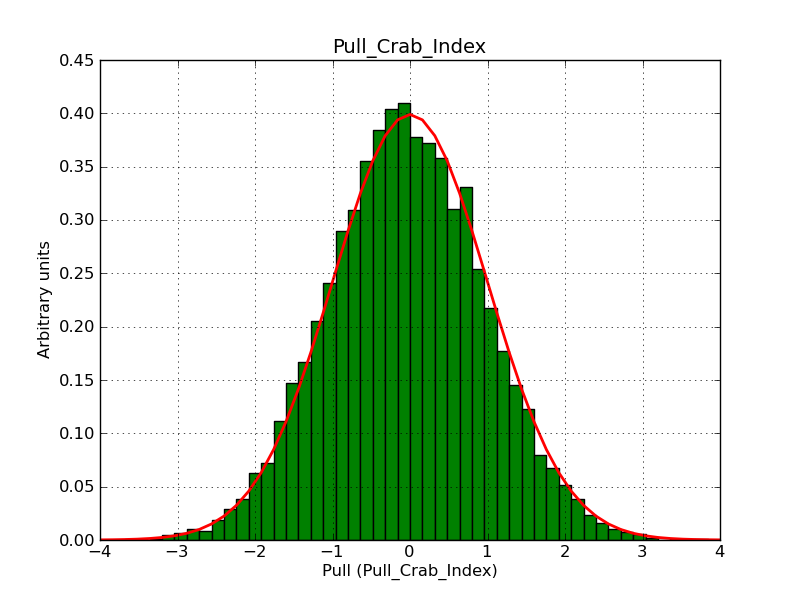

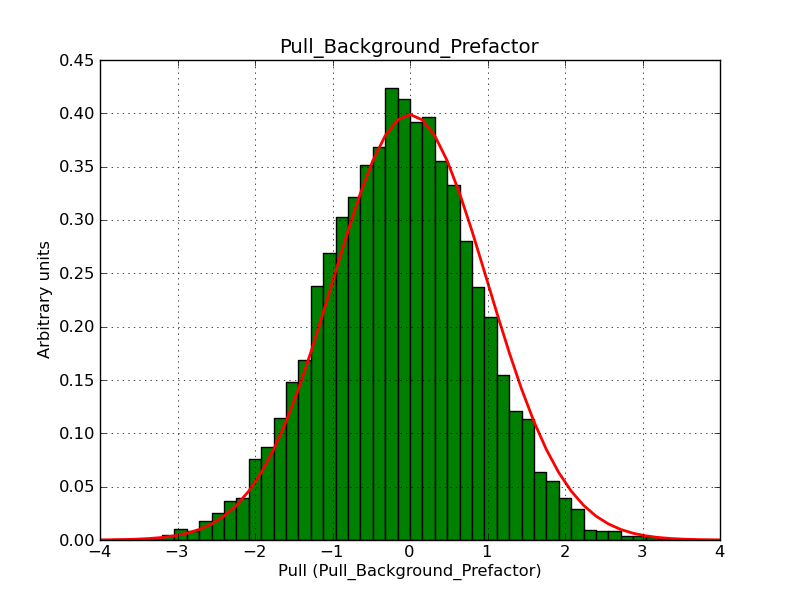

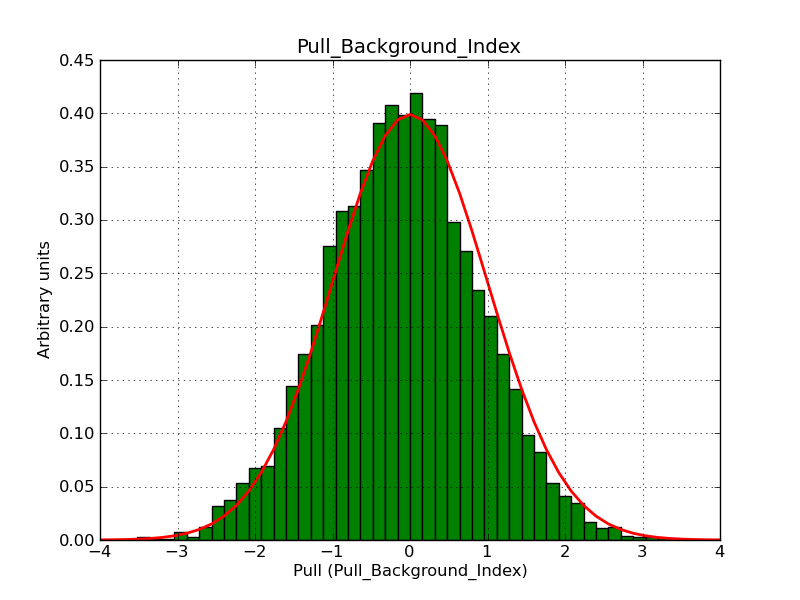

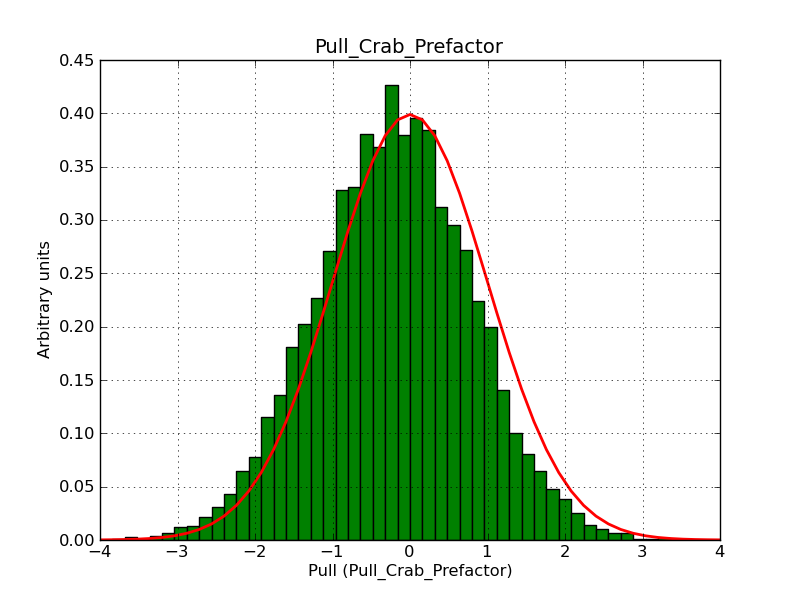

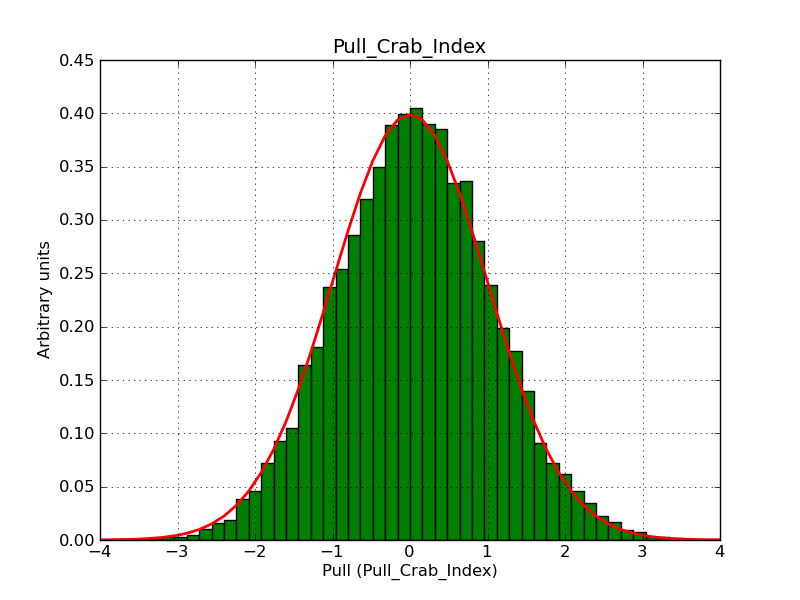

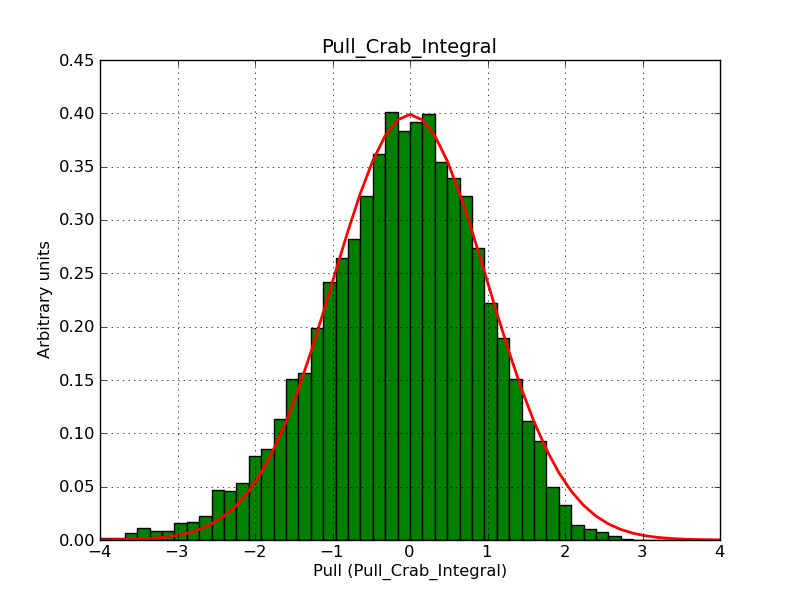

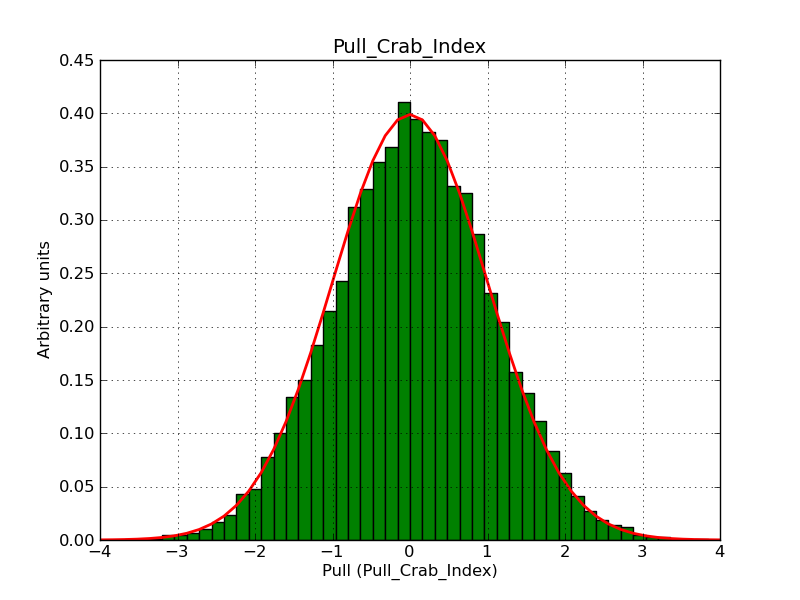

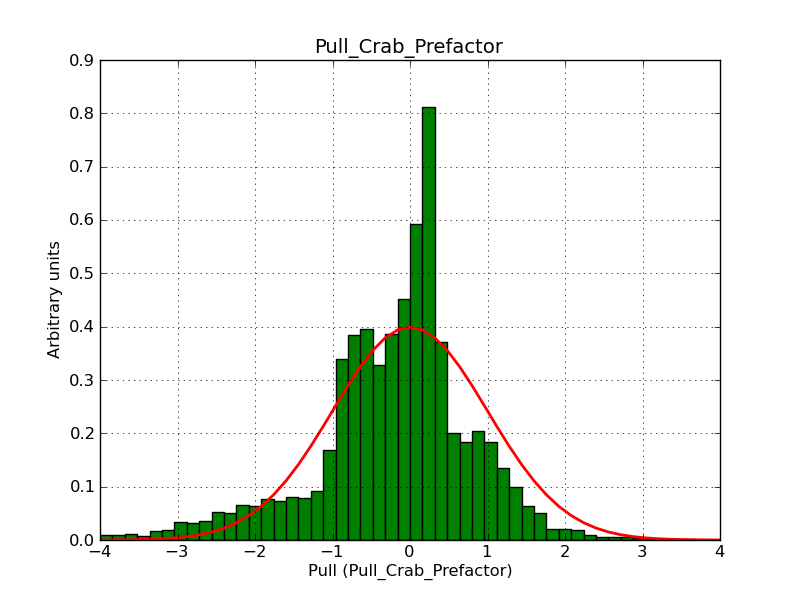

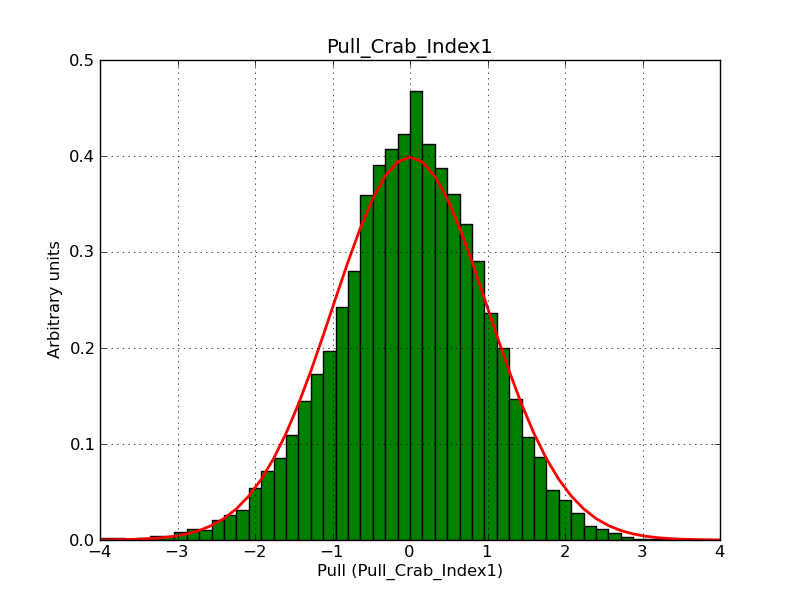

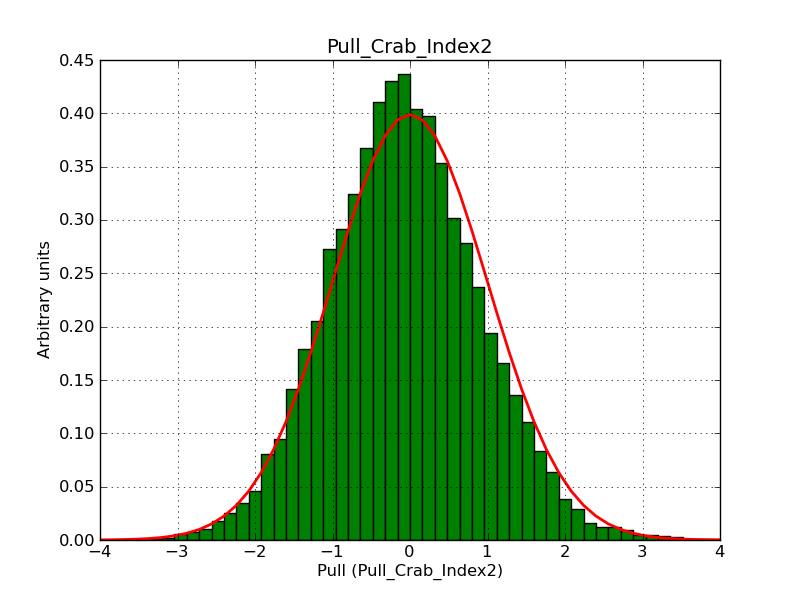

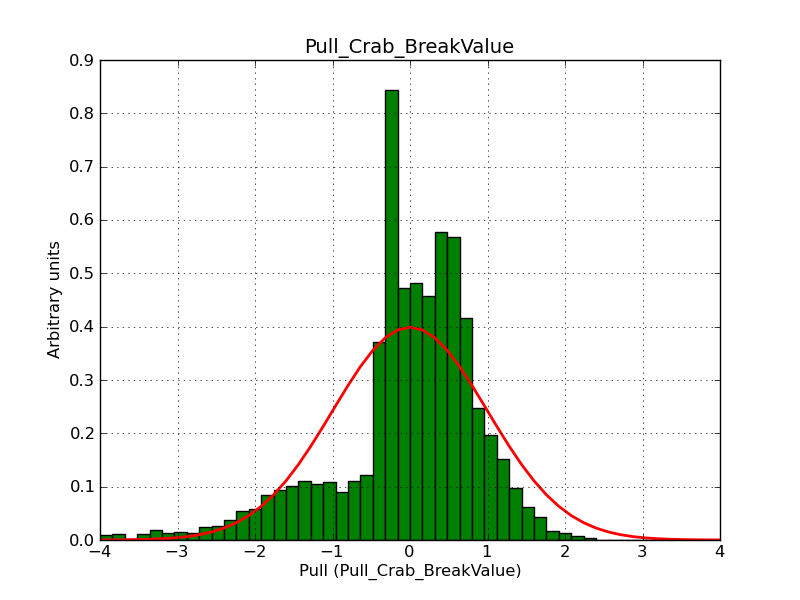

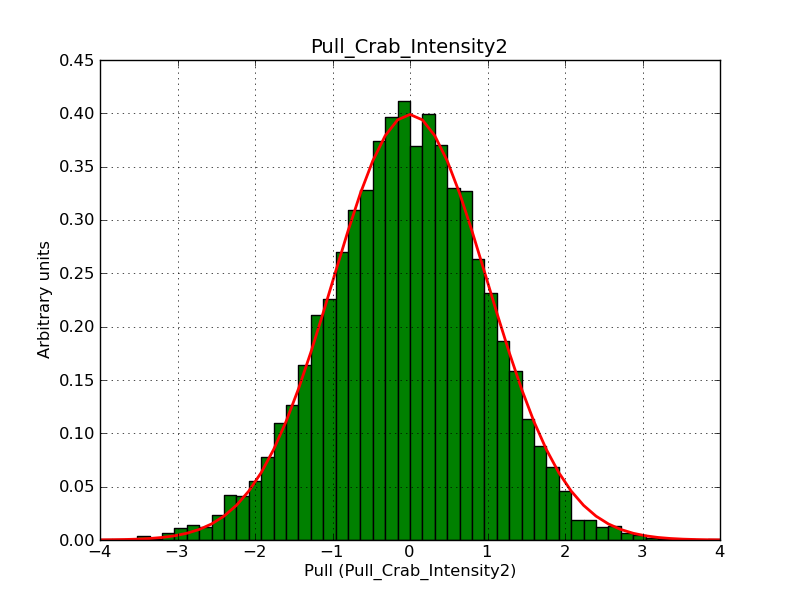

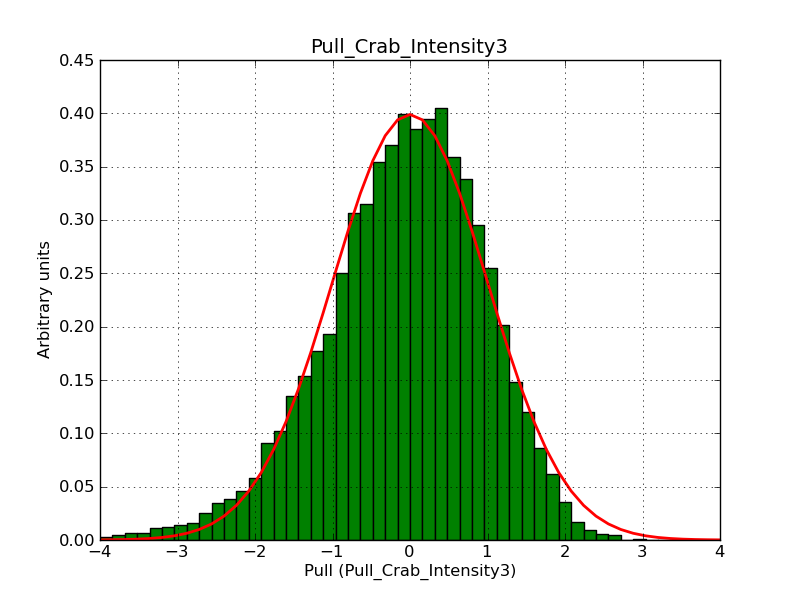

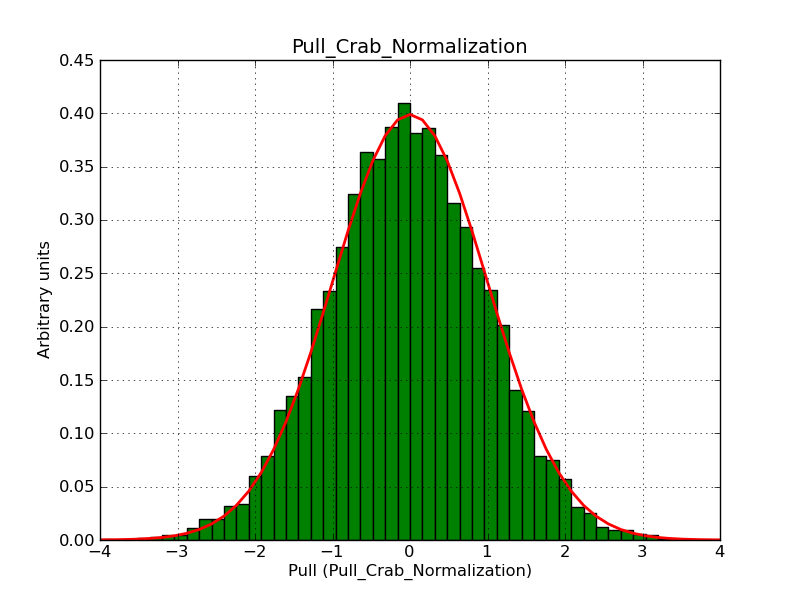

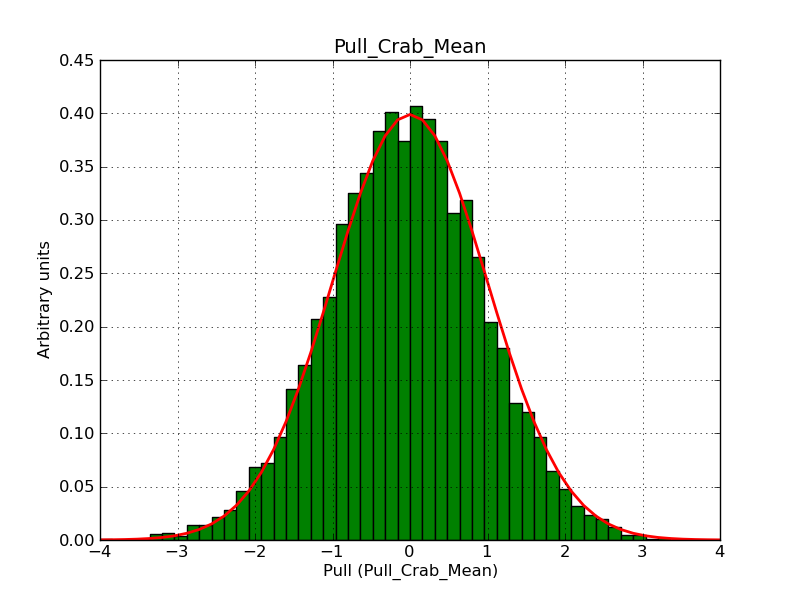

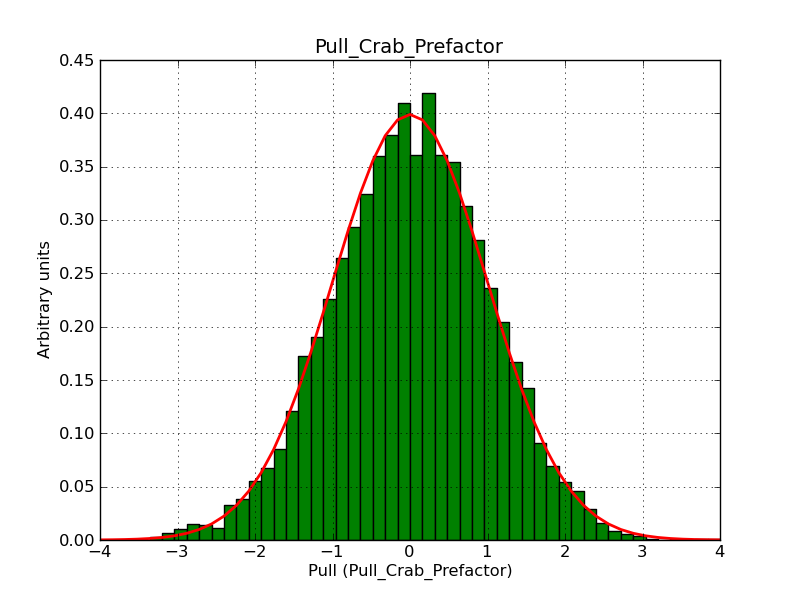

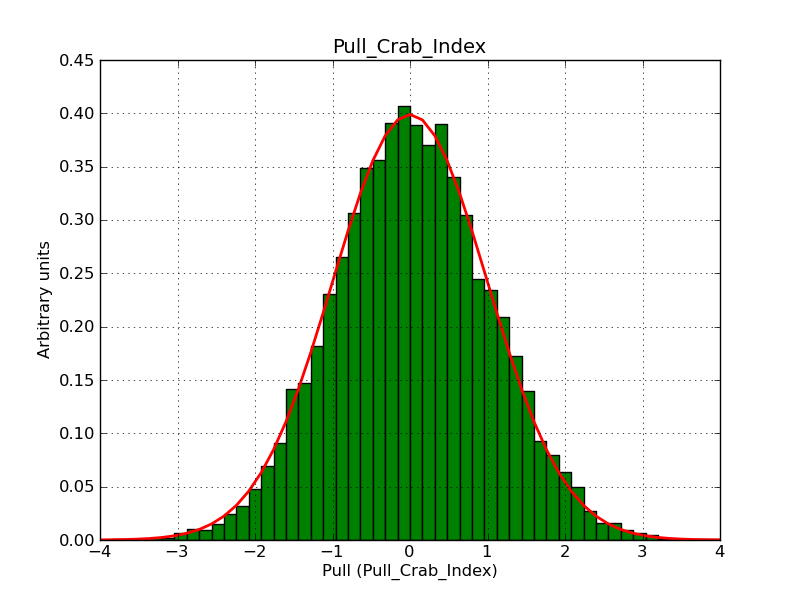

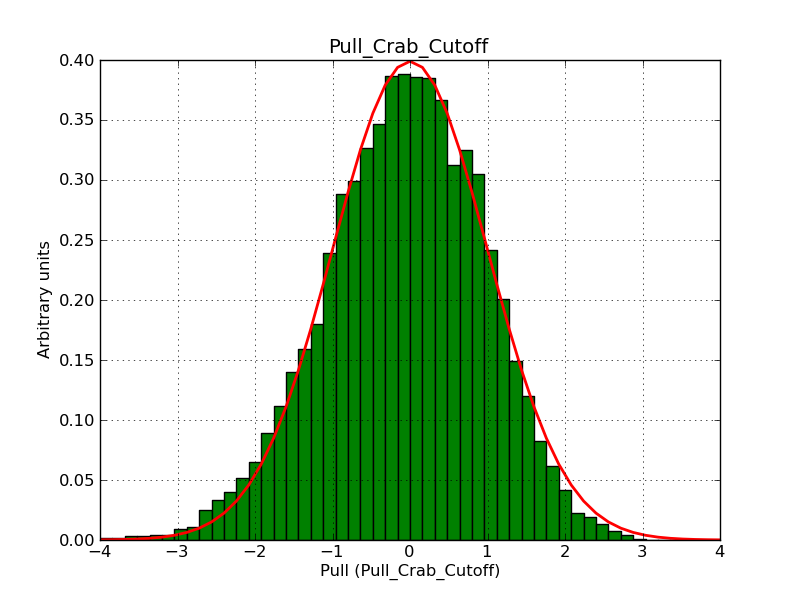

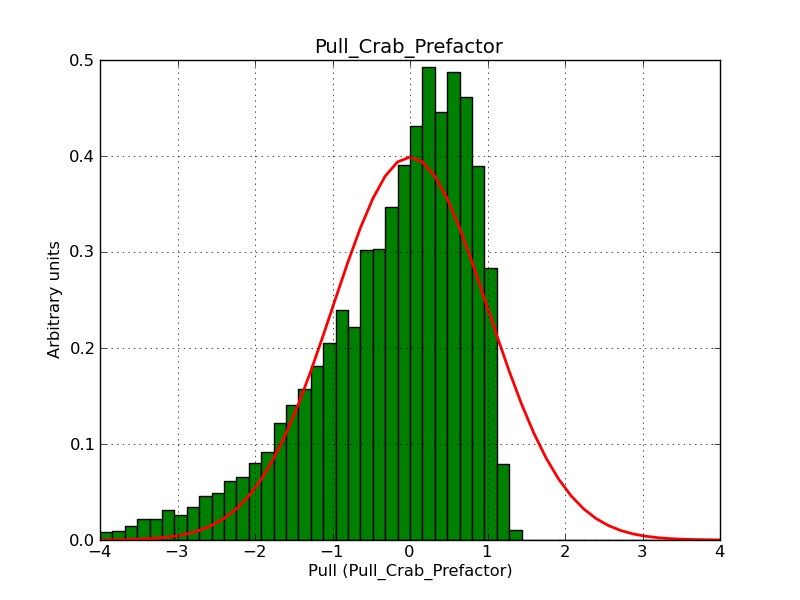

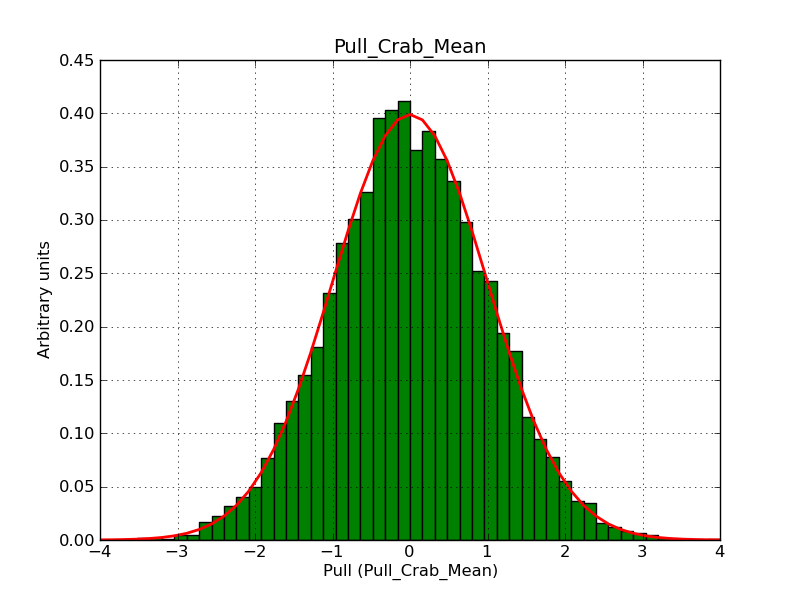

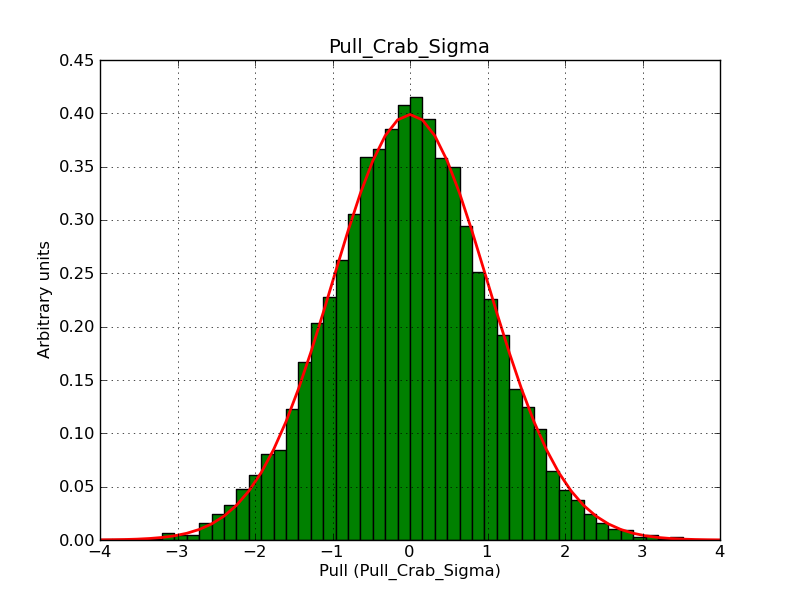

The interface has been validated using the COMPTEL data from Viewing Period 1. This observation was a 14 days pointing on the Crab. The analysis has been performed using GammaLib-00-07-00. The background has been modeled using a Phibar-fitted DRG file. This is only a crude background model, which may explain the discrepancy between nominal and fitted fluxes. The quoted sensitivities and the sensitivity derived by multiplying the statistical uncertainty by a factor of 3 are pretty close. Units are ph/cm2/s.

| Energy | Nominal flux (1) | Fitted flux | Error | Quoted sensitivity (2) | Sensitivity (3*error) |

| 0.7-1 MeV | 5.55e-4 | 8.55e-4 | 5.80e-5 | 2.01e-4 | 1.74e-4 |

| 1-3 MeV | 1.07e-3 | 1.283e-3 | 5.633e-5 | 1.68e-4 | 1.69e-4 |

| 3-10 MeV | 3.98e-4 | 2.42e-4 | 2.48e-5 | 7.3e-5 | 7.43e-4 |

| 10-30 MeV | 1.06e-4 | 5.03e-5 | 8.28e-6 | 2.8e-5 | 2.48e-5 |

Updated over 10 years ago by Knödlseder Jürgen

Here a summary of some benchmarks that have been obtained on a 2.66 GHz Intel Core i7 Mac OS X 10.6.8 system for executing 100000000 (one hundred million) times a given computation (execution times in seconds). The benchmarks have been obtained in double precision and single precision:

| Computation | double | float | Comment |

| pow(x,2) | 1.90 | 1.19 | |

| x*x | 0.49 | 0.48 | Prefer multiplication over pow(x,2) |

| pow(x,2.01) | 7.96 | 4.19 | pow is a very time consuming operation |

| x/a | 0.98 | 1.22 | |

| x*b (where b=1/a) | 0.46 | 0.66 | Prefer multiplication by the inverse over division |

| x+1.5 | 0.40 | 0.40 | |

| x-1.5 | 0.49 | 0.49 | Prefer addition over subtraction |

sin |

4.66 | 2.39 | |

cos |

4.64 | 2.46 | |

tan |

5.40 | 2.84 | tan is pretty time consuming |

acos |

2.18 | 0.94 | |

sqrt |

1.29 | 1.37 | |

log10 |

2.60 | 2.48 | |

log |

2.72 | 2.33 | |

exp |

7.17 | 7.20 | exp is a very time consuming operation (comparable to pow) |

Note that pow, exp and the trigonometric functions are significantly (a factor of about 2) faster using single precision compared to double precision.

And here a comparison for various computing systems (double precision). In this comparison, the Mac is about the fastest, galileo (which is a 32 Bit system) is pretty fast for multiplications, kepler is the laziest (AMD related? Multi-core related?), fermi and the CI13 virtual box are about the same (there is no notable difference between gcc and clang on the virtual box).

| Computation | Mac OS X | galileo | kepler | dirac | fermi | CI13 (gcc 4.8.0) | CI13 (clang 3.1) |

| pow(x,2) | 1.90 | 5.73 | 4.83 | 3.5 | 2.65 | 1.94 | 1.99 |

| x*x | 0.49 | 0.31 | 1.04 | 1.06 | 0.5 | 0.58 | 0.57 |

| pow(x,2.01) | 7.96 | 10.96 | 17.53 | 17.73 | 11.11 | 8.71 | 8.44 |

| x/a | 0.98 | 1.24 | 1.87 | 1.92 | 1.03 | 1.15 | 1.16 |

| x*b (where b=1/a) | 0.46 | 0.27 | 0.99 | 0.99 | 0.51 | 0.54 | 0.54 |

| x+1.5 | 0.40 | 0.27 | 0.96 | 1.02 | 0.43 | 0.47 | 0.47 |

| x-1.5 | 0.49 | 0.27 | 1.08 | 1.1 | 0.57 | 0.47 | 0.47 |

sin |

4.66 | 4.76 | 10.46 | 10.44 | 6.72 | 5.62 | 5.52 |

cos |

4.64 | 4.68 | 10.16 | 10.28 | 6.35 | 5.65 | 5.62 |

tan |

5.40 | 6.27 | 15.23 | 15.4 | 8.61 | 8.11 | 7.98 |

acos |

2.18 | 9.57 | 7.49 | 7.75 | 4.48 | 3.86 | 2.93 |

sqrt |

1.29 | 3.29 | 2.33 | 2.4 | 0.97 | 2.02 | 1.84 |

log10 |

2.60 | 5.33 | 12.91 | 12.58 | 7.71 | 6.54 | 6.47 |

log |

2.72 | 5.15 | 10.64 | 10.66 | 6.32 | 5.26 | 5.09 |

exp |

7.17 | 10 | 4.78 | 4.8 | 1.85 | 2.03 | 2.02 |

Underlined numbers show the fastest, bold numbers the slowest computations.

And the same for single precision:

| Computation | Mac OS X | galileo | kepler | dirac | fermi | CI13 (gcc 4.8.0) | CI13 (clang 3.1) |

| pow(x,2) | 1.19 | 1.77 | 3.27 | 3 | 1.35 | 1.54 | 0.9 |

| x*x | 0.48 | 0.3 | 0.99 | 1 | 0.47 | 0.54 | 0.54 |

| pow(x,2.01) | 4.19 | 10.64 | 29.81 | 30.21 | 14.42 | 13 | 12.29 |

| x/a | 1.22 | 1.24 | 2.77 | 2.79 | 1.2 | 1.37 | 1.4 |

| x*b (where b=1/a) | 0.66 | 0.27 | 1.72 | 1.74 | 0.67 | 0.76 | 0.79 |

| x+1.5 | 0.40 | 0.27 | 1.03 | 1.04 | 0.4 | 0.46 | 0.47 |

| x-1.5 | 0.49 | 0.27 | 1.13 | 1.14 | 0.54 | 0.47 | 0.47 |

sin |

2.39 | 4.92 | 116.41 | 119.06 | 54 | 41.2 | 40.22 |

cos |

2.46 | 4.85 | 116.47 | 119.27 | 53.93 | 40.91 | 40.3 |

tan |

2.84 | 6.47 | 120.69 | 122 | 55.14 | 42.36 | 41.83 |

acos |

0.94 | 9.02 | 8.6 | 8.71 | 3.86 | 2.81 | 2.38 |

sqrt |

1.37 | 2.27 | 3.77 | 3.75 | 1.5 | 1.84 | 1.55 |

log10 |

2.48 | 4.15 | 12.74 | 12.59 | 6.28 | 5.74 | 4.97 |

log |

2.33 | 3.83 | 10.07 | 10.42 | 5.16 | 4.88 | 4.11 |

exp |

7.20 | 9.96 | 17.51 | 18.32 | 10.77 | 10.21 | 10.18 |

Note the enormous speed penalty of trigonometric functions on most of the systems. Floating point arithmetics are only faster on Mac OS X.

Here the specifications of the machines used for benchmarking:Here now some information to understand what happens.

std::sin(double)std::sin(float)sin(double)sin(float)It turned out that the call to std::sin(float) calls the function sinf, while all other codes call sin. The execution time difference is therefore related to different implementations of sin and sinf on Kepler.

Note that sin and sinf are implement in /lib64/libm.so.6 on Kepler. This library is part of the GNU C library libc (see http://www.gnu.org/software/libc/).

When std::sin(double) is used, the sin function will be called by the processor. Note that the same behavior is obtained when calling sin(double) (without the std prefix).

$ nano stdsin.cpp

#include <cmath>

int main(void)

{

double arg = 1.0;

double result = std::sin(arg);

return 0;

}

$ g++ -S stdsin.cpp

$ more stdsin.s

main:

.LFB97:

pushq %rbp

.LCFI0:

movq %rsp, %rbp

.LCFI1:

subq $32, %rsp

.LCFI2:

movabsq $4607182418800017408, %rax

movq %rax, -16(%rbp)

movq -16(%rbp), %rax

movq %rax, -24(%rbp)

movsd -24(%rbp), %xmm0

call sin

movsd %xmm0, -24(%rbp)

movq -24(%rbp), %rax

movq %rax, -8(%rbp)

movl $0, %eax

leave

ret

When std::sin(float) is used, the sinf function will be called by the processor.

$ nano floatstdsin.cpp

#include <cmath>

int main(void)

{

float arg = 1.0;

float result = std::sin(arg);

return 0;

}

$ g++ -S floatstdsin.cpp

$ more floatstdsin.s

main:

.LFB97:

pushq %rbp

.LCFI3:

movq %rsp, %rbp

.LCFI4:

subq $32, %rsp

.LCFI5:

movl $0x3f800000, %eax

movl %eax, -8(%rbp)

movl -8(%rbp), %eax

movl %eax, -20(%rbp)

movss -20(%rbp), %xmm0

call _ZSt3sinf

movss %xmm0, -20(%rbp)

movl -20(%rbp), %eax

movl %eax, -4(%rbp)

movl $0, %eax

leave

ret

_ZSt3sinf:

.LFB57:

pushq %rbp

.LCFI0:

movq %rsp, %rbp

.LCFI1:

subq $16, %rsp

.LCFI2:

movss %xmm0, -4(%rbp)

movl -4(%rbp), %eax

movl %eax, -12(%rbp)

movss -12(%rbp), %xmm0

call sinf

movss %xmm0, -12(%rbp)

movl -12(%rbp), %eax

movl %eax, -12(%rbp)

movss -12(%rbp), %xmm0

leave

ret

When sin(float) is used, the compiler will perform an implicit conversion to double and then call the sin function.

$ nano floatsin.cpp

#include <cmath>

int main(void)

{

float arg = 1.0;

float result = sin(arg);

return 0;

}

$ g++ -S floatsin.cpp

$ more floatsin.s

main:

.LFB97:

pushq %rbp

.LCFI0:

movq %rsp, %rbp

.LCFI1:

subq $16, %rsp

.LCFI2:

movl $0x3f800000, %eax

movl %eax, -8(%rbp)

cvtss2sd -8(%rbp), %xmm0

call sin

cvtsd2ss %xmm0, %xmm0

movss %xmm0, -4(%rbp)

movl $0, %eax

leave

ret

And now the same experiment on Mac OS X. It turns out that the code generated by the compiler has the same structure, and the functions that are called are again _sin and _sinf (all function names have a _ prepended on Mac OS X). This means that the implementation of the _sinf function on Mac OS X is considerably faster than the implementation on kepler.

Note that _sin and _sinf are implement in /usr/lib/libSystem.B.dylib on my Mac OS X.

When std::sin(double) is used, the _sin function will be called by the processor. Note that the same behavior is obtained when calling sin(double) (without the std prefix).

$ nano stdsin.cpp

#include <cmath>

int main(void)

{

double arg = 1.0;

double result = std::sin(arg);

return 0;

}

$ g++ -S stdsin.cpp

$ more stdsin.s

_main:

LFB127:

pushq %rbp

LCFI0:

movq %rsp, %rbp

LCFI1:

subq $16, %rsp

LCFI2:

movabsq $4607182418800017408, %rax

movq %rax, -8(%rbp)

movsd -8(%rbp), %xmm0

call _sin

movsd %xmm0, -16(%rbp)

movl $0, %eax

leave

ret

When std::sin(float) is used, the _sinf function will be called by the processor.

$ nano floatstdsin.cpp

#include <cmath>

int main(void)

{

float arg = 1.0;

float result = std::sin(arg);

return 0;

}

$ g++ -S floatstdsin.cpp

$ more floatstdsin.s

_main:

LFB127:

pushq %rbp

LCFI3:

movq %rsp, %rbp

LCFI4:

subq $16, %rsp

LCFI5:

movl $0x3f800000, %eax

movl %eax, -4(%rbp)

movss -4(%rbp), %xmm0

call __ZSt3sinf

movss %xmm0, -8(%rbp)

movl $0, %eax

leave

ret

LFB87:

pushq %rbp

LCFI0:

movq %rsp, %rbp

LCFI1:

subq $16, %rsp

LCFI2:

movss %xmm0, -4(%rbp)

movss -4(%rbp), %xmm0

call _sinf

leave

ret

When sin(float) is used, the compiler will perform an implicit conversion to double and then call the _sin function.

$ nano floatsin.cpp

#include <cmath>

int main(void)

{

float arg = 1.0;

float result = sin(arg);

return 0;

}

$ g++ -S floatsin.cpp

$ more floatsin.s

_main:

LFB127:

pushq %rbp

LCFI0:

movq %rsp, %rbp

LCFI1:

subq $16, %rsp

LCFI2:

movl $0x3f800000, %eax

movl %eax, -4(%rbp)

cvtss2sd -4(%rbp), %xmm0

call _sin

cvtsd2ss %xmm0, %xmm0

movss %xmm0, -8(%rbp)

movl $0, %eax

leave

ret

Updated over 10 years ago by Knödlseder Jürgen

The only absolutely necessary configuration step is identifying yourself and your contact info:

$ git config --global user.name "Joe Public" $ git config --global user.email "joe.public@gmail.com"

Please make sure that you specify your full name as user.name, do not use your abbreviated login name because this makes code modification tracking more cryptic.

In case that you get

error: SSL certificate problem, verify that the CA cert is OK.you may add

$ export GIT_SSL_NO_VERIFY=truebefore retrieving the code. Alternatively, you may type

$ git config --global http.sslverify "false"which disables certificate verification globally for your Git installation.

Updated over 11 years ago by Knödlseder Jürgen

This page summarizes information about the Continuous Integration strategy and procedures that have been implemented for GammaLib.

Continuous integration (CI) is performed in a number of pipelines, each one consisting of a number of integration steps (such as building, testing, documenting, etc.) that will be executed consecutively. Each pipeline step is executed independently of the success of the preceding step. If an integration step fails, a notification is sent.

Below a summary of the integration pipelines that have been implemented. All CI pipelines run on the devel branch of the Git repository. Pipelines are either triggered manually or automatically.

| Pipeline | Trigger | Task |

| OS | nightly (00:00) | Validation on various operating systems |

| Compiler | nightly (02:00) | Validation using various compilers (i386 and x86_64 architectures) |

| Python | nightly (04:00) | Validation using various Python versions (i386 and x86_64 architectures, gcc and clang compilers) |

| swig | manually | Validation using various swig versions (for Python 2 and Python 3) |

The Operating System (OS) pipeline performs a CI of GammaLib on various operating systems. For the Linux system, a large number of distributions is tested. In addition to Linux, BSD and Solaris are tested.

The pipeline consists of 3 steps for GammaLib: building (make), unit testing (make check), and creation of the Doxygen documentation (make doxygen).

After the documentation step, additional steps are added for dependent projects (for the moment there is a build and a unit testing step for ctools, resulting in a 5 step OS pipeline).

The compiler pipeline performs a CI of GammaLib using different compiler types and versions. For the moment, gcc and clang are tested. gcc is tested from version 3.2.x on up to the most recent version, clang is tested for version 3.1). The tests are performed on the i386 and x86_64 architectures.

The pipeline consists of 2 steps for GammaLib: building (make) and unit testing (make check).

After the unit testing step, additional steps are added for dependent projects (for the moment there is a build and a unit testing step for ctools, resulting in a 4 step compiler pipeline).

The Python pipeline performs a CI of GammaLib using different Python versions. For the moment, Python 2.7.3 and Python 3.2.3 are tested. The tests are performed on the i386 and x86_64 architectures and using the gcc 4.7.0 and clang 3.1 compilers.

The pipeline consists of 2 steps for GammaLib: building (make) and unit testing (make check).

After the unit testing step, additional steps are added for dependent projects (for the moment there is a build and a unit testing step for ctools, resulting in a 4 step Python pipeline).

The swig pipeline performs a CI of GammaLib using different swig versions. The tests are performed using Python 2.7.3 and Python 3.2.3.

The pipeline consists of 2 steps for GammaLib: building (make) and unit testing (make check).

After the unit testing step, additional steps are added for dependent projects (for the moment there is a build and a unit testing step for ctools, resulting in a 4 step swig pipeline).

Updated about 3 years ago by Knödlseder Jürgen

This page explains how you can contribute to the development of the GammaLib library.

If some of the software is not yet installed on your system, it is very likely that you can install it through your system’s package manager. On Mac OS X, you may use the Homebrew or MacPorts package managers. Make sure that you install the development packages of cfitsio, Python and readline as they provide the header files that are required for compilation.

GammaLib uses Git for version control.

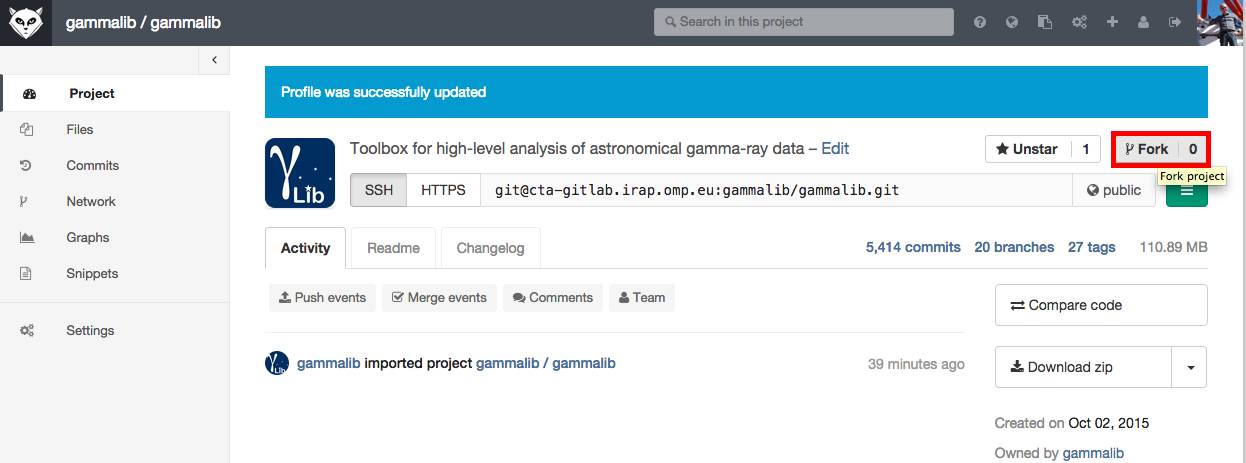

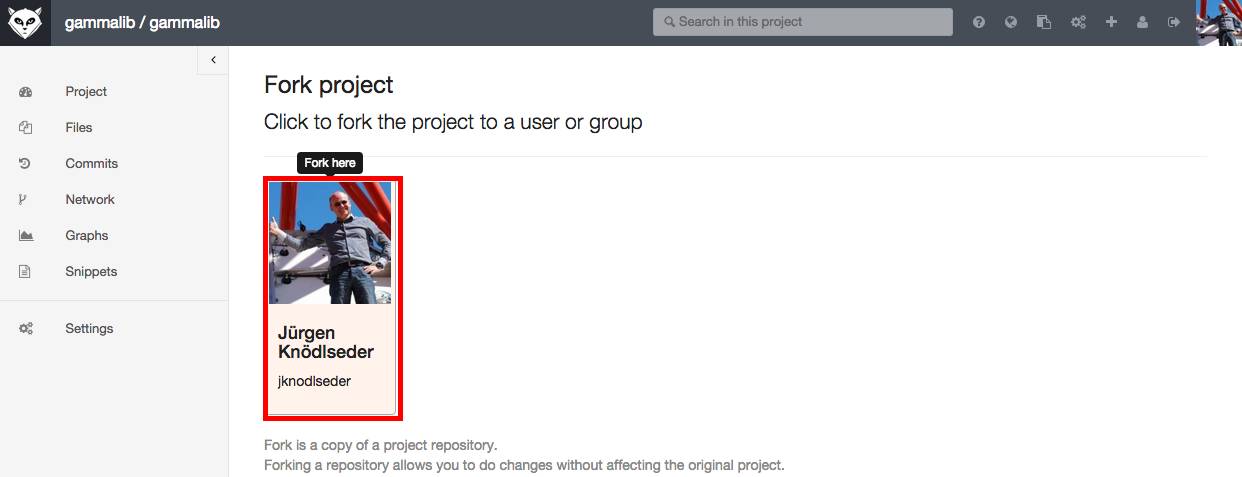

To learn how Git is used within the GammaLib project, please familiarize yourself with the Git workflow.

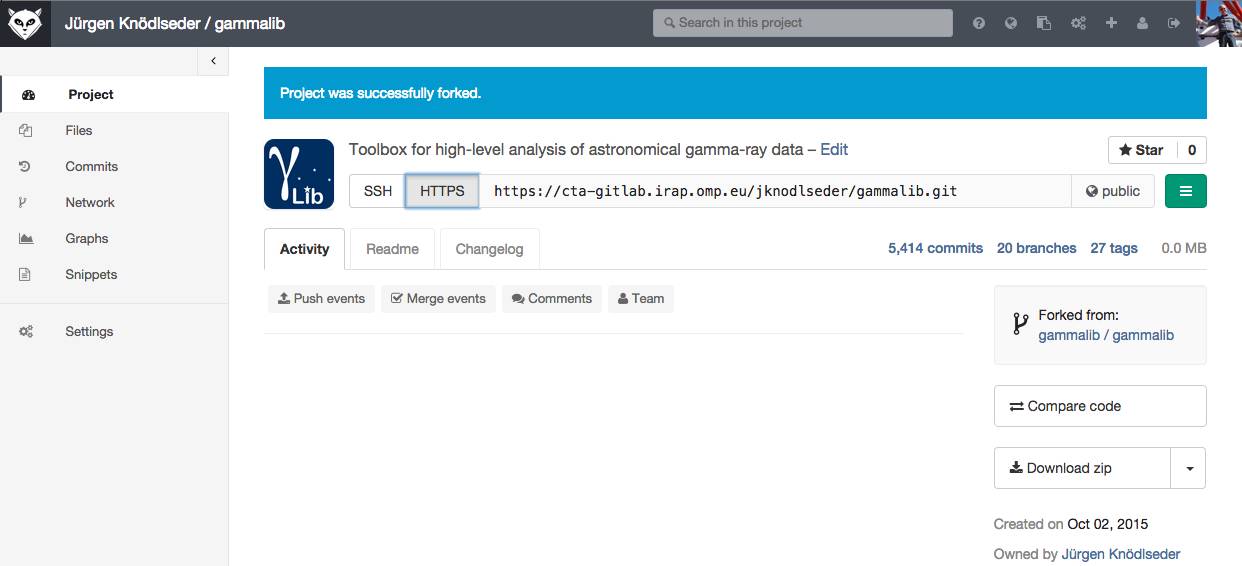

The central GammaLib Git repository can be found at https://cta-gitlab.irap.omp.eu/gammalib/gammalib.git. A mirror of the central repository is available on GitHub at https://github.com/gammalib/gammalib. Both repositories are read-only, and are accessed using the https protocol. Both on Gitlab and GitHub you may fork the GammaLib repository and develop your code using this fork (read Git workflow to learn how).

The default branch from which you should start your software development is the devel branch. devel is GammaLib’s trunk. The command

$ git clone https://cta-gitlab.irap.omp.eu/gammalib/gammalib.gitwill automatically clone this branch from the central GammaLib Git repository.

Software developments are done in feature branches. When the development is finished, issue a pull request so that the feature branch gets merged into devel. Merging is done by the GammaLib integration manager.

After cloning GammaLib (see above) you will find a directory called gammalib. To learn more about the structure of this directory read GammaLib directory structure. Before this directory can be used for development, we have to prepare it for configuration. If you’re not familiar with the autotools, please read this section carefully so that you get the big picture.

Step into the gammalib directory and prepare for configuration by typing

$ cd gammalib $ ./autogen.sh

glibtoolize: putting auxiliary files in `.'. glibtoolize: copying file `./ltmain.sh' glibtoolize: putting macros in AC_CONFIG_MACRO_DIR, `m4'. glibtoolize: copying file `m4/libtool.m4' glibtoolize: copying file `m4/ltoptions.m4' glibtoolize: copying file `m4/ltsugar.m4' glibtoolize: copying file `m4/ltversion.m4' glibtoolize: copying file `m4/lt~obsolete.m4' configure.ac:74: installing './config.guess' configure.ac:74: installing './config.sub' configure.ac:35: installing './install-sh' configure.ac:35: installing './missing'

glibtoolize you may see libtoolize on some systems. Also, some systems may produce a more verbose output.

The autogen.sh script invokes the following commands:

glibtoolize or libtoolizeaclocalautoconfautoheaderautomakeglibtoolize or libtoolize installs files that are relevant for the libtool utility. Those are ltmain.sh under the main directory and a couple of files under the m4 directory (see the above output).

aclocal scans the configure.ac script and generates the files aclocal.m4 and autom4te.cache. The file aclocal.m4 contains a number of macros that will be used by the configure script later.

autoconf scans the configure.ac script and generates the file configure. The configure script will be used later for configuring of GammaLib (see below).

autoheader scans the configure.ac script and generates the file config.h.in.

automake scans the configure.ac script and generates the files Makefile.in, config.guess, config.sub, install-sh, and missing. Note that the files Makefile.in will generated in all subdirectories that contain a file Makefile.am.

There is a single command to configure GammaLib:

$ ./configure

configure is a script that has been generated previously by the autoconf step. A typical output of the configuration step is provided in the file attachment:configure.out.

By the end of the configuration step, you will see the following sequence

config.status: creating Makefile config.status: creating src/Makefile config.status: creating src/support/Makefile config.status: creating src/linalg/Makefile config.status: creating src/numerics/Makefile config.status: creating src/fits/Makefile config.status: creating src/xml/Makefile config.status: creating src/sky/Makefile config.status: creating src/opt/Makefile config.status: creating src/obs/Makefile config.status: creating src/model/Makefile config.status: creating src/app/Makefile config.status: creating src/test/Makefile config.status: creating src/gammalib-setup config.status: WARNING: 'src/gammalib-setup.in' seems to ignore the --datarootdir setting config.status: creating include/Makefile config.status: creating test/Makefile config.status: creating pyext/Makefile config.status: creating pyext/setup.py config.status: creating gammalib.pc config.status: creating inst/Makefile config.status: creating inst/mwl/Makefile config.status: creating inst/cta/Makefile config.status: creating inst/lat/Makefile config.status: creating config.hHere, each file with an suffix

.in will be converted into a file without suffix. For example, Makefile.in will become Makefile, or setup.py.in will become setup.py. In this step, variables (such as path names, etc.) will be replaced by their absolute values.

This sequence will also produce a header file named config.h that is stored in the gammalib root directory. This header file will contain a number of compiler definitions that can be used later in the code to adapt to the specific environment. Recall that config.h.in has been created using autoheader by scanning the configure.ac file, hence the specification of which compiler definitions will be available is ultimately done in configure.ac.

The configure script will end with a summary about the detected configuration. Here a typical example:

GammaLib configuration summary ============================== * FITS I/O support (yes) /usr/local/gamma/lib /usr/local/gamma/include * Readline support (yes) /usr/local/gamma/lib /usr/local/gamma/include/readline * Ncurses support (yes) * Make Python binding (yes) use swig for building * Python (yes) * Python.h (yes) - Python wrappers (no) * swig (yes) * Multiwavelength interface (yes) * Fermi-LAT interface (yes) * CTA interface (yes) * Doxygen (yes) /opt/local/bin/doxygen * Perform NaN/Inf checks (yes) (default) * Perform range checking (yes) (default) * Optimize memory usage (yes) (default) * Enable OpenMP (yes) (default) - Compile in debug code (no) (default) - Enable code for profiling (no) (default)The

configure script tests for the presence of required libraries, checks if Python is available, analysis whether swig is needed to build Python wrappers, signals the presence of instrument modules, and handles compile options. To learn more about available options, type$ ./configure --help

GammaLib is compiled by typing

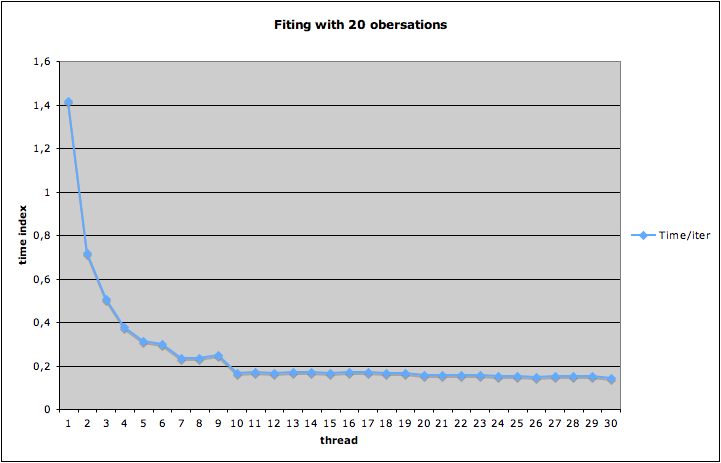

$ makeIn case you develop GammaLib on a multi-processor and/or multi-core machine, you may accelerate compilation by specifying the number of threads after the

-j argument, e.g.$ make -j10uses 10 parallel threads at maximum for compilation.

gmake, which on most systems is in fact aliased to make. If this is not the case on your system, use gmake explicitely. (gmake is required due to some of the instructions in pyext/Makefile.am).

When compiling GammaLib, one should be aware of the way how dependency tracking has been setup. Dependency tracking is a system that determines which files need to be recompiled after modifications. For standard dependency tracking, the compiler preprocessor determines all files on which a given file depends on. If one of the dependencies changes, the file will be recompiled. The problem with the standard dependency tracking is that it always compiles a lot of files, which would make the GammaLib development cycle very slow. For this reason, standard dependency tracking has been disabled. This has been done by adding no-dependencies to the options for the AM_INIT_AUTOMAKE macro in configure.ac. If you prefer to enable standard dependency tracking, it is sufficient to remove no-dependencies from the options. After removal, run

$ autoconf $ ./configure

Without standard dependency tracking, the following logic applies:

.cpp) that have been modified will be recompiled when make is invoked. make uses the modification date of a file to determine if recompilation is needed. Thus, to enforce recompilation of a file it is sufficient to update its modification date using touch, e.g.touch src/app/GPars.cppenforces recompilation of the

GPars.cpp file and rebuilding of the GammaLib library..hpp) that have been modified will not lead to recompilation. This is a major hurdle, and if you think that a modification of a header file affects largely the system, it is recommended to make a full build using$ make clean $ makeVery often, however, only the corresponding source file is affected, and it is sufficient to

touch the source file to enforce its recompilation..i) that have been modified will lead to a rebuilding of the Python interface. In fact, dependency tracking has been enabled for the SWIG files. In case you want to rebuild the entire Python interface, execute the following command sequence$ cd pyext $ make clean $ cd .. $ make

When a Git branch is changed, the files that differ will have different modification dates, hence recompilation will take place. However, as mentioned above, header file (.hpp) changes will not be tracked, which could lead to a corrupt system. It is thus recommended to recompile the full library after a change of the Git branch using

$ make clean $ make

The GammaLib unit test suite is run using

$ make checkIn case that the unit test code has not been compiled so far (or if the code has been changed), compilation is performed before testing. Full dependency tracking is implemented for the C++ unit tests, hence all GammaLib library files that have been modified will be recompiled, the library will be rebuilt, and unit tests will be recompiled (in needed) before executing the test. However, full dependency tracking is still missing for the Python interface, hence one should make sure that the Python interface is recompiled before executing the unit test (see above).

A successful unit test will terminate with

=================== All 20 tests passed ===================For each of the unit tests, a test report in JUnit compliant XML format will be written into the

gammalib/test/reports directory. These reports can be analyzed by any standard tools (e.g. Sonar).

To execute only a subset of the unit tests, type the following

$ env TESTS="test_python.sh ../inst/cta/test/test_CTA" make -e checkThis will execute the Python and the CTA unit test. You may add any combination of tests in the string.

If you’d like to repeat the subset of unit test it is sufficient to type

$ make -e checkas

TESTS is still set. To unset TESTS, type$ unset TESTS

As GammaLib developments should be test driven, the GammaLib unit tests are an essential tool for debugging the code. If some unit test fails for an unknown reason, the gdb debugger can be used for analysis as follows. Each test executable (e.g. test_CTA) is in fact a script that properly sets the environment and then executes the test binary. Opening the test script in an editor will reveal the place where the test executable is called:

$ nano test_CTA

...

# Core function for launching the target application

func_exec_program_core ()

{

if test -n "$lt_option_debug"; then

$ECHO "test_CTA:test_CTA:${LINENO}: newargv[0]: $progdir/$program" 1>&2

func_lt_dump_args ${1+"$@"} 1>&2

fi

exec "$progdir/$program" ${1+"$@"}

$ECHO "$0: cannot exec $program $*" 1>&2

exit 1

}

...

It is now sufficient to replace exec by gdb, i.e.

$ nano test_CTA

...

# Core function for launching the target application

func_exec_program_core ()

{

if test -n "$lt_option_debug"; then

$ECHO "test_CTA:test_CTA:${LINENO}: newargv[0]: $progdir/$program" 1>&2

func_lt_dump_args ${1+"$@"} 1>&2

fi

gdb "$progdir/$program" ${1+"$@"}

$ECHO "$0: cannot exec $program $*" 1>&2

exit 1

}

...

Now, invoking test_CTA will launch the debugger:$ ./test_CTA GNU gdb 6.3.50-20050815 (Apple version gdb-1515) (Sat Jan 15 08:33:48 UTC 2011) Copyright 2004 Free Software Foundation, Inc. GDB is free software, covered by the GNU General Public License, and you are welcome to change it and/or distribute copies of it under certain conditions. Type "show copying" to see the conditions. There is absolutely no warranty for GDB. Type "show warranty" for details. This GDB was configured as "x86_64-apple-darwin"...Reading symbols for shared libraries ....... done (gdb) run Starting program: /Users/jurgen/git/gammalib/test/.libs/test_CTA Reading symbols for shared libraries .++++++. done ***************************************** * CTA instrument specific class testing * ***************************************** Test response: .. ok Test effective area: .. ok Test PSF: ........ ok Test integrated PSF: ..... ok Test diffuse IRF: .. ok Test diffuse IRF integration: .. ok Test unbinned observations: ...... ok Test binned observation: ... ok Test unbinned optimizer: ......................... ok Test binned optimizer: ......................... ok Program exited normally. (gdb) quit ./test_CTA: cannot exec test_CTA

Have a look e.g. at test_GSky.py on how to write Python unit tests in gammalib.

Note that when you run your unit test via test_python.py, your Python code is actually run in a way that makes it impossible to debug your code. print statements will not print to the console and Python exceptions will abort your test, but not show up on the console or the GPython.xml unit test log file.

To write Python unit tests, you should first develop them in independent scripts or in the IPython console or notebook, and only when they are debugged, copy them into the gammalib test file, and add the GPythonTestSuite assert statements at the end of your test.

One possibility to debug Python in general is to add this line before the code you want to debug:

import IPython; IPython.embed()

This will drop you in an IPython interactive session, with the state (stack, variables) as it is in your Python script, and you can inspect variables or copy & paste the following statements from your script to see what is going on.

If you would like to use GammaLib e.g. from standalone C++ programs or Python scripts or from ctools,

the procedure described above will not work.

You have to execute the additional make install step and source the gammalib-init.sh setup file so that you’ll actually use the new version of GammaLib:

$ export GAMMALIB=<wherever you want> $ ./configure --prefix=$GAMMALIB $ make install $ source $GAMMALIB/bin/gammalib-init.sh

If you are unsure which version of GammaLib you are using you can use $GAMMALIB or pkg-config to find out:

$ echo $GAMMALIB $ ls -lh $GAMMALIB/lib/libgamma.* $ pkg-config --libs gammalib

Look at the modification date of the libgamma.so file (libgamma.dylib on Mac) to check if it’s the one that contains your latest changes.

To be written.

To be written.

To be written.

Updated over 8 years ago by Knödlseder Jürgen

This page explains how you can contribute to the development of the GammaLib library.

If some of the software is not yet installed on your system, it is very likely that you can install it through your system’s package manager. Make sure that you install the development packages of cfitsio, Python and readline as they provide the header files that are required for compilation. Alternatively, you may install the software from source. On Mac OS X, you may use the MacPorts system.

GammaLib uses Git for version control. To learn how Git is used within the GammaLib project, please familiarize yourself with the Git workflow for GammaLib.

The central GammaLib Git repository can be found at https://cta-git.irap.omp.eu/gammalib. Access to the repository is managed by the https protocol. The repository is publicly accessible for reading. Pushing (i.e. writing) requires password authentification.

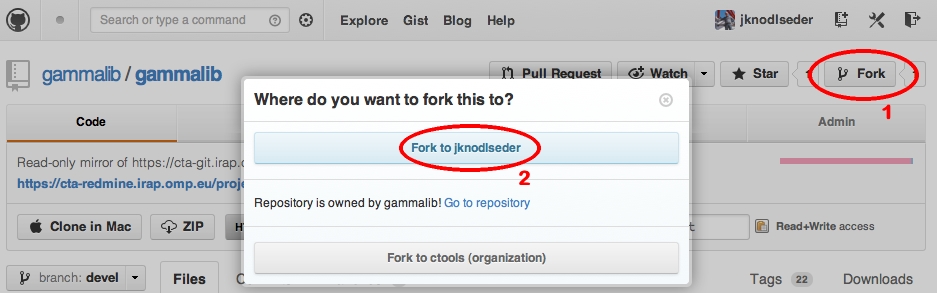

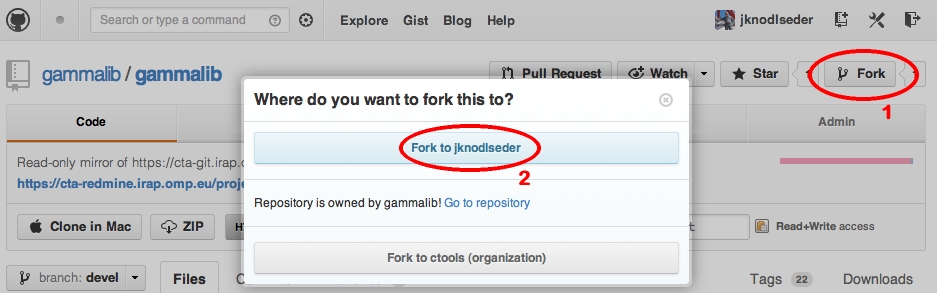

GammaLib is also accessible on GitHub at the address https://github.com/gammalib/gammalib. The GitHub repository is a mirror of the central GammaLib Git repository, and is read-only. You may fork the GammaLib repository on GitHub and develop your code using this fork (read Git workflow for GammaLib to learn how).

master: source code of last public code releasedevel: central development branch (trunk)release: branch used to stabilize the code prior to a new releaseintegration: branch used for code integrationPushing to these branches is prohibited. Only the integration and release manager(s) will write into these branches.

In addition, temporary feature or hotfix branches may exist.

The default branch from which you should start your software development is the devel branch. devel is GammaLib’s trunk. The command

$ git clone https://cta-git.irap.omp.eu/gammalibwill automatically clone this branch from the central GammaLib Git repository.

Software developments are done in feature branches. When the development is finished, issue a pull request so that the feature branch gets merged into devel. Merging is done by the GammaLib integration manager.

After cloning GammaLib (see above) you will find a directory called gammalib. To learn more about the structure of this directory read GammaLib directory structure.

Before this directory can be used for development, we have to prepare it for configuration. If you’re not familiar with the autotools, please read this section carefully so that you get the big picture.

Step into the gammalib directory and prepare for configuration by typing

$ cd gammalib $ ./autogen.sh

glibtoolize: putting auxiliary files in `.'. glibtoolize: copying file `./ltmain.sh' glibtoolize: putting macros in AC_CONFIG_MACRO_DIR, `m4'. glibtoolize: copying file `m4/libtool.m4' glibtoolize: copying file `m4/ltoptions.m4' glibtoolize: copying file `m4/ltsugar.m4' glibtoolize: copying file `m4/ltversion.m4' glibtoolize: copying file `m4/lt~obsolete.m4' configure.ac:74: installing './config.guess' configure.ac:74: installing './config.sub' configure.ac:35: installing './install-sh' configure.ac:35: installing './missing'

glibtoolize you may see libtoolize on some systems. Also, some systems may produce a more verbose output.

The autogen.sh script invokes the following commands:

glibtoolize or libtoolizeaclocalautoconfautoheaderautomakeglibtoolize or libtoolize installs files that are relevant for the libtool utility. Those are ltmain.sh under the main directory and a couple of files under the m4 directory (see the above output).

aclocal scans the configure.ac script and generates the files aclocal.m4 and autom4te.cache. The file aclocal.m4 contains a number of macros that will be used by the configure script later.

autoconf scans the configure.ac script and generates the file configure. The configure script will be used later for configuring of GammaLib (see below).

autoheader scans the configure.ac script and generates the file config.h.in.

automake scans the configure.ac script and generates the files Makefile.in, config.guess, config.sub, install-sh, and missing. Note that the files Makefile.in will generated in all subdirectories that contain a file Makefile.am.

There is a single command to configure GammaLib:

$ ./configure

configure is a script that has been generated previously by the autoconf step. A typical output of the configuration step is provided in the file configure.out.

By the end of the configuration step, you will see the following sequence

config.status: creating Makefile config.status: creating src/Makefile config.status: creating src/support/Makefile config.status: creating src/linalg/Makefile config.status: creating src/numerics/Makefile config.status: creating src/fits/Makefile config.status: creating src/xml/Makefile config.status: creating src/sky/Makefile config.status: creating src/opt/Makefile config.status: creating src/obs/Makefile config.status: creating src/model/Makefile config.status: creating src/app/Makefile config.status: creating src/test/Makefile config.status: creating src/gammalib-setup config.status: WARNING: 'src/gammalib-setup.in' seems to ignore the --datarootdir setting config.status: creating include/Makefile config.status: creating test/Makefile config.status: creating pyext/Makefile config.status: creating pyext/setup.py config.status: creating gammalib.pc config.status: creating inst/Makefile config.status: creating inst/mwl/Makefile config.status: creating inst/cta/Makefile config.status: creating inst/lat/Makefile config.status: creating config.hHere, each file with an suffix

.in will be converted into a file without suffix. For example, Makefile.in will become Makefile, or setup.py.in will become setup.py. In this step, variables (such as path names, etc.) will be replaced by their absolute values.

This sequence will also produce a header file named config.h that is stored in the gammalib root directory. This header file will contain a number of compiler definitions that can be used later in the code to adapt to the specific environment. Recall that config.h.in has been created using autoheader by scanning the configure.ac file, hence the specification of which compiler definitions will be available is ultimately done in configure.ac.

The configure script will end with a summary about the detected configuration. Here a typical example:

GammaLib configuration summary ============================== * FITS I/O support (yes) /usr/local/gamma/lib /usr/local/gamma/include * Readline support (yes) /usr/local/gamma/lib /usr/local/gamma/include/readline * Ncurses support (yes) * Make Python binding (yes) use swig for building * Python (yes) * Python.h (yes) - Python wrappers (no) * swig (yes) * Multiwavelength interface (yes) * Fermi-LAT interface (yes) * CTA interface (yes) * Doxygen (yes) /opt/local/bin/doxygen * Perform NaN/Inf checks (yes) (default) * Perform range checking (yes) (default) * Optimize memory usage (yes) (default) * Enable OpenMP (yes) (default) - Compile in debug code (no) (default) - Enable code for profiling (no) (default)The

configure script tests for the presence of required libraries, checks if Python is available, analysis whether swig is needed to build Python wrappers, signals the presence of instrument modules, and handles compile options. To learn more about available options, type$ ./configure --help

GammaLib is compiled by typing

$ makeIn case you develop GammaLib on a multi-processor and/or multi-core machine, you may accelerate compilation by specifying the number of threads after the

-j argument, e.g.$ make -j10uses 10 parallel threads at maximum for compilation.

gmake, which on most systems is in fact aliased to make. If this is not the case on your system, use gmake explicitely. (gmake is required due to some of the instructions in pyext/Makefile.am).

When compiling GammaLib, one should be aware of the way how dependency tracking has been setup. Dependency tracking is a system that determines which files need to be recompiled after modifications. For standard dependency tracking, the compiler preprocessor determines all files on which a given file depends on. If one of the dependencies changes, the file will be recompiled. The problem with the standard dependency tracking is that it always compiles a lot of files, which would make the GammaLib development cycle very slow. For this reason, standard dependency tracking has been disabled. This has been done by adding no-dependencies to the options for the AM_INIT_AUTOMAKE macro in configure.ac. If you prefer to enable standard dependency tracking, it is sufficient to remove no-dependencies from the options. After removal, run

$ autoconf $ ./configure

Without standard dependency tracking, the following logic applies:

.cpp) that have been modified will be recompiled when make is invoked. make uses the modification date of a file to determine if recompilation is needed. Thus, to enforce recompilation of a file it is sufficient to update its modification date using touch, e.g.touch src/app/GPars.cppenforces recompilation of the

GPars.cpp file and rebuilding of the GammaLib library..hpp) that have been modified will not lead to recompilation. This is a major hurdle, and if you think that a modification of a header file affects largely the system, it is recommended to make a full build using$ make clean $ makeVery often, however, only the corresponding source file is affected, and it is sufficient to

touch the source file to enforce its recompilation..i) that have been modified will lead to a rebuilding of the Python interface. In fact, dependency tracking has been enabled for the SWIG files. In case you want to rebuild the entire Python interface, execute the following command sequence$ cd pyext $ make clean $ cd .. $ make

When a Git branch is changed, the files that differ will have different modification dates, hence recompilation will take place. However, as mentioned above, header file (.hpp) changes will not be tracked, which could lead to a corrupt system. It is thus recommended to recompile the full library after a change of the Git branch using

$ make clean $ make

The GammaLib unit test suite is run using

$ make checkIn case that the unit test code has not been compiled so far (or if the code has been changed), compilation is performed before testing. Full dependency tracking is implemented for the C++ unit tests, hence all GammaLib library files that have been modified will be recompiled, the library will be rebuilt, and unit tests will be recompiled (in needed) before executing the test. However, full dependency tracking is still missing for the Python interface, hence one should make sure that the Python interface is recompiled before executing the unit test (see above).

A successful unit test will terminate with

=================== All 20 tests passed ===================For each of the unit tests, a test report in JUnit compliant XML format will be written into the

gammalib/test/reports directory. These reports can be analyzed by any standard tools (e.g. Sonar).

To execute only a subset of the unit tests, type the following

$ env TESTS="test_python.py test_CTA" make -e checkThis will execute the Python and the CTA unit test. You may add any combination of tests in the string.

If you’d like to repeat the subset of unit test it is sufficient to type

$ make -e checkas

TESTS is still set. To unset TESTS, type$ unset TESTS

As GammaLib developments should be test driven, the GammaLib unit tests are an essential tool for debugging the code. If some unit test fails for an unknown reason, the gdb debugger can be used for analysis as follows. Each test executable (e.g. test_CTA) is in fact a script that properly sets the environment and then executes the test binary. Opening the test script in an editor will reveal the place where the test executable is called:

$ nano test_CTA

...

# Core function for launching the target application

func_exec_program_core ()

{

if test -n "$lt_option_debug"; then

$ECHO "test_CTA:test_CTA:${LINENO}: newargv[0]: $progdir/$program" 1>&2

func_lt_dump_args ${1+"$@"} 1>&2

fi

exec "$progdir/$program" ${1+"$@"}

$ECHO "$0: cannot exec $program $*" 1>&2

exit 1

}

...

It is now sufficient to replace exec by gdb, i.e.

$ nano test_CTA

...

# Core function for launching the target application

func_exec_program_core ()

{

if test -n "$lt_option_debug"; then

$ECHO "test_CTA:test_CTA:${LINENO}: newargv[0]: $progdir/$program" 1>&2

func_lt_dump_args ${1+"$@"} 1>&2

fi

gdb "$progdir/$program" ${1+"$@"}

$ECHO "$0: cannot exec $program $*" 1>&2

exit 1

}

...

Now, invoking test_CTA will launch the debugger:$ ./test_CTA GNU gdb 6.3.50-20050815 (Apple version gdb-1515) (Sat Jan 15 08:33:48 UTC 2011) Copyright 2004 Free Software Foundation, Inc. GDB is free software, covered by the GNU General Public License, and you are welcome to change it and/or distribute copies of it under certain conditions. Type "show copying" to see the conditions. There is absolutely no warranty for GDB. Type "show warranty" for details. This GDB was configured as "x86_64-apple-darwin"...Reading symbols for shared libraries ....... done (gdb) run Starting program: /Users/jurgen/git/gammalib/test/.libs/test_CTA Reading symbols for shared libraries .++++++. done ***************************************** * CTA instrument specific class testing * ***************************************** Test response: .. ok Test effective area: .. ok Test PSF: ........ ok Test integrated PSF: ..... ok Test diffuse IRF: .. ok Test diffuse IRF integration: .. ok Test unbinned observations: ...... ok Test binned observation: ... ok Test unbinned optimizer: ......................... ok Test binned optimizer: ......................... ok Program exited normally. (gdb) quit ./test_CTA: cannot exec test_CTA

Have a look e.g. at test_GSky.py on how to write Python unit tests in gammalib.

Note that when you run your unit test via test_python.py, your Python code is actually run in a way that makes it impossible to debug your code. print statements will not print to the console and Python exceptions will abort your test, but not show up on the console or the GPython.xml unit test log file.

To write Python unit tests, you should first develop them in independent scripts or in the IPython console or notebook, and only when they are debugged, copy them into the gammalib test file, and add the GPythonTestSuite assert statements at the end of your test.

One possibility to debug Python in general is to add this line before the code you want to debug:

import IPython; IPython.embed()

This will drop you in an IPython interactive session, with the state (stack, variables) as it is in your Python script, and you can inspect variables or copy & paste the following statements from your script to see what is going on.

If you would like to use GammaLib e.g. from standalone C++ programs or Python scripts or from ctools,

the procedure described above will not work.

You have to execute the additional make install step and source the gammalib-init.sh setup file so that you’ll actually use the new version of GammaLib:

$ export GAMMALIB=<wherever you want> $ ./configure --prefix=$GAMMALIB $ make install $ source $GAMMALIB/bin/gammalib-init.sh

If you are unsure which version of GammaLib you are using you can use $GAMMALIB or pkg-config to find out:

$ echo $GAMMALIB $ ls -lh $GAMMALIB/lib/libgamma.* $ pkg-config --libs gammalib

Look at the modification date of the libgamma.so file (libgamma.dylib on Mac) to check if it’s the one that contains your latest changes.

To be written.

To be written.

To be written.

Updated over 2 years ago by Knödlseder Jürgen

This page summarises a couple of thoughts on a possible COSI instrument interface

There are different event types that need to be handled by the interface, including Compton events, pair events, photo effect events, muon events and unidentified events.

For each of the event types a specific GCOSEventList and GCOSEventAtom class needs to be implemented to cope with the different data structures of the event types. If needed, GCOSEventList and GCOSEventAtom base classes may be implemented for common services. For example, GCOSEventList could handle the generic reading of tra and fits files. For convenience, reading of both file types should be supported.

Each event type would then be handled by a specific instance of GCOSObservation. While the GCOSObservation will probably be generic for any kind of COSI event type, it will hold the specific GCOSEventList class that corresponds to a single event type.

Since the response for a given event type will be quite specific, separate response classes for specific event types will be implemented, possibly derived from a GCOSResponse base class.

Therefore we expect that the following classes will be implemented ultimately:

GCOSObservation GCOSEventList +- GCOSComptonEvents +- GCOSPairEvents +- GCOSPhotoEvents +- GCOSMuonEvents +- GCOSUnidEvents GCOSEventAtom +- GCOSComptonEvent +- GCOSPairEvent +- GCOSPhotoEvent +- GCOSMuonEvent +- GCOSUnidEvent GCOSResponse +- GCOSComptonResponse +- GCOSPairResponse +- GCOSPhotoResponse +- GCOSMuonResponse +- GCOSUnidResponseIt remains to be seen whether actually all response classes need to be implemented, as not all event types will be used for science.

Some functionality should be implemented to define ranges for the number of hits for GCOSComptonEvents and the associated GCOSComptonResponse. This will allow to handle different hit numbers as different observations, combining all information in a joint maximum likelihood analysis.

Updated over 9 years ago by Knödlseder Jürgen

Updated over 9 years ago by Knödlseder Jürgen

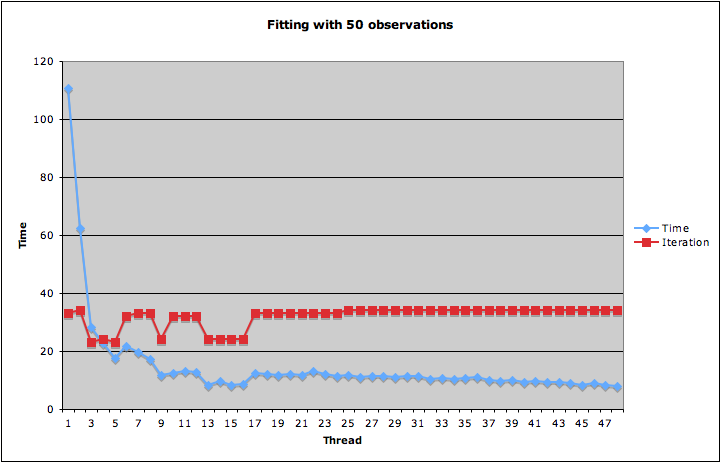

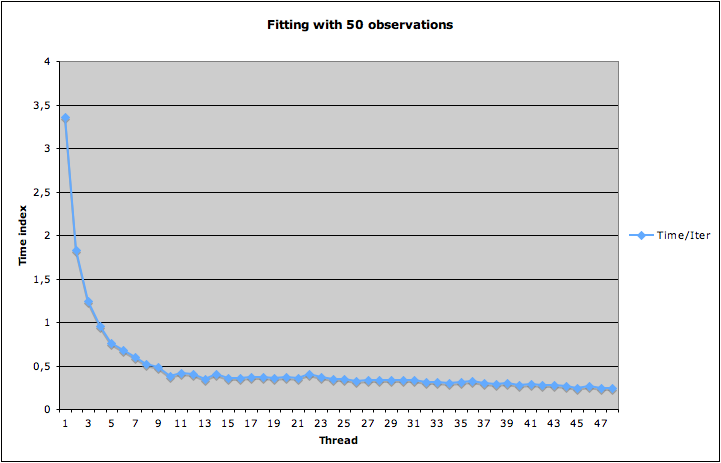

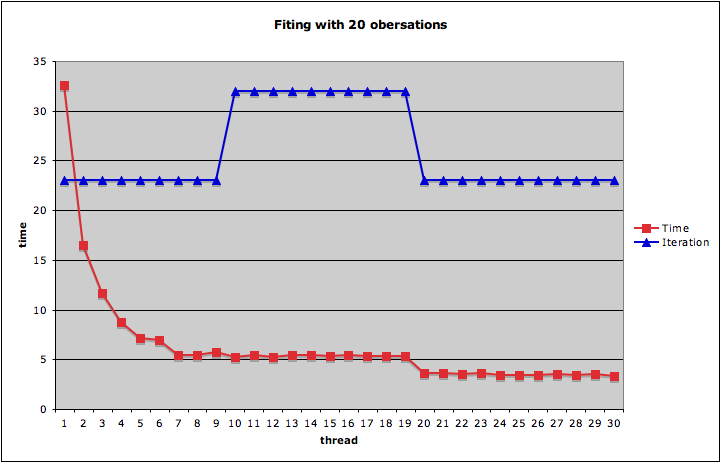

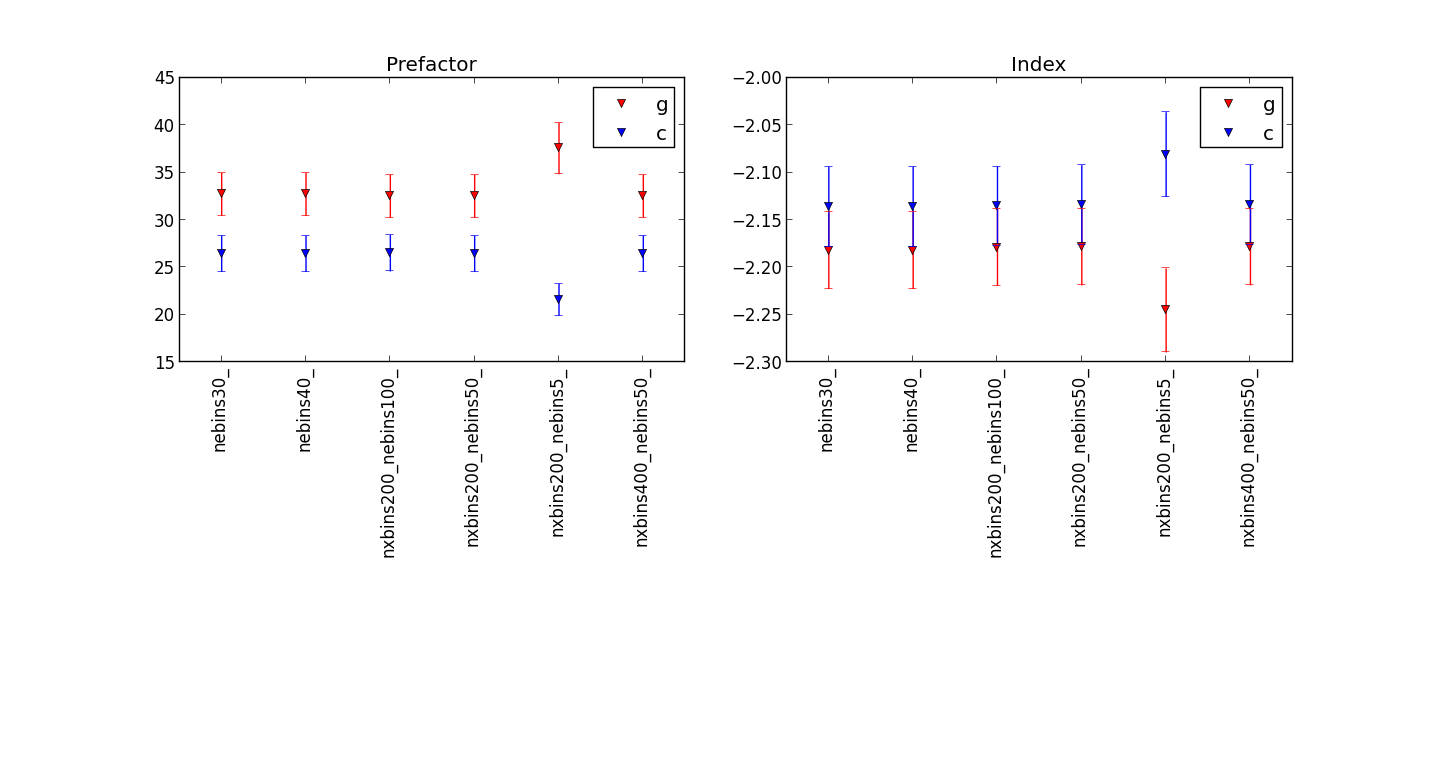

benchmark_ml_fitting.py that can be found in the inst/cta/test directory. The script needs to be run in that directory once GammaLib has been installed. It uses test data that are shipped together with the GammaLib package and that serve as a reference. Test data exist for different simulated spatial models, all test data share the following characteristics:

cta_dummy_irf response function (performance table)The following table summarizes the benchmarks results for unbinned, binned and stacked analysis. The response computation differs for these three analysis methods, and consequently, computing times are not identical. Note that the executing time for unbinned analysis scales roughly linearly with observing time (or to be precise with the number of events), while the executing time for stacked analysis is roughly independent of observing time. Execution time for binned analysis scales with the number of individual observations (or runs) that are analysed. Each table cell shows the computing time (i.e. CPU time), the number of fitting iterations needed, the log-likelihood value, and the fitted source parameters given in the order they appear in the model.

The benchmark has been obtained on 30 October 2014 on kepler (CentOS 5, x86_64, AMD Opteron 6164 HE, 1.7 GHz). OpenMP support has been disabled to ensure accurate timing.

| Model | Unbinned | Binned | Stacked |

| Point | 0.14 sec (3, 33105.355, 6.037e-16, -2.496) | 14.2 sec (3, 17179.086, 5.993e-16, -2.492) | 9.1 sec (3, 17179.082, 5.992e-16, -2.492) |

| Disk | 31.4 sec (3, 34176.549, 83.633, 22.014, 0.201, 5.456e-16, -2.459) | 252.0 sec (3, 18452.537, 83.632, 22.014, 0.202, 5.394e-16, -2.456) | 127.1 sec (3, 18452.503, 83.632, 22.014, 0.202, 5.418e-16, -2.457) |

| Gauss | 36.4 sec (3, 35059.430, 83.626, 22.014, 0.204, 5.374e-16, -2.468) | 1539.5 sec (3, 19327.126, 83.626, 22.014, 0.205, 5.346e-16, -2.468) | 1237.2 sec (3, 19327.267, 83.626, 22.014, 0.205, 5.368e-16, -2.473) |

| Shell | 70.5 sec (3, 35261.037, 83.633, 22.020, 0.286, 0.115, 5.816e-16, -2.449) | 815.6 sec (3, 19301.278, 83.632, 22.019, 0.284, 0.118, 5.771e-16, -2.445) | 526.5 sec (4, 19301.380, 83.633, 22.019, 0.286, 0.117, 5.794e-16, -2.447) |

| Ellipse | 156.9 sec (5, 35363.713, 83.569, 21.956, 44.789, 1.998, 0.472, 5.430e-16, -2.482) | 5754.1 sec (6, 19943.973, 83.572, 21.956, 44.909, 1.988, 0.474, 5.331e-16, -2.473) | 6519.6 sec (6, 19944.471, 83.572, 21.958, 44.927, 2.007, 0.467, 5.314e-16, -2.444) |

| Diffuse | 3.9 sec (18 of which 9 stalled, 32629.409, 5.296e-16, -2.663) | 12639.9 sec (18 of which 10 stalled, 18221.681, 5.681e-16, -2.647) | 295.8 sec (18 of which 10 stalled, 18221.815, 5.624e-16, -2.665) |

Updated about 11 years ago by Deil Christoph

To implement the standard HESS analysis in GammaLib, on-off fitting should be supported. On-off fitting means that the off data are fit simultaneously to the on data. The relevant mathematics can be found in Mathieu_OnOffFitter.pdf. An good reference that has the relevant formulae we can cite in the documentation is Mathieu de Naurois’s habilitation thesis .

For the moment, GammaLib only supports fitting on models without any uncertainties.

The first question is: where should we put the off data.

One natural place would be GCTAObservation, in parallel with the data. We could add a pointer for off events to GCTAObservation. If the pointer is NULL, the original method is used. If the data-cube exists, a modified likelihood formula is used that takes into account the off-data.

We just have to think a little bit more about how we fiddle the off counts normalization factor into all this. Somehow, we have to add the parameter to the model. Maybe we have to add an “off model” to our model container, and then establish a machanism that maps from a off model to a specific off data-cube.

Alternatively, the off-data could simply come with the model. This model would be of type GDataModel. However, it would be a little complicated to have the on data defined in the observation, while the off data are defined in the model.

Maybe a hybrid approach would be the best: having an GCTAModelOff or similar to describe the background, and loading the relevant file into GCTAObservation.

Note, however, that there is some similarity with having an acceptance model (see action #569). Here we want to feed a map cube as background model to the fitter. Again, do we want to have the acceptance model specified in the model, or in the observation.

We probably could solve both by introducing a GCTAModelBackground class that handles map cubes. Note that a map cube could be a spectrum (a sky cube with a single pixel)! We could then load the background model directly in the observation, and handle the rest by the model. Just, the simple logic discussed about (with the NULL pointer) won’t work, as the acceptance model will be exact (is this true?), while the off data will have noise.

Updated almost 11 years ago by Knödlseder Jürgen

This table summarizes the discussion on the response format during the June 2013 code sprint.

| Response component | Axes | Binning |

| Effective area | x, y, log10 energy | 20 x 20 x 50 |

| PSF | x, y, log10 energy, offset or parametrization | 20 x 20 x 50 x (50 or a couple) |

| Energy dispersion | x, y, log10 E_true, log10 E_measured | 20 x 20 x 50 x 50 |

x, y any coordinates? Could be easier in Right Ascension and Declination. Could be TAN projection.

Updated over 11 years ago by Deil Christoph

gammalib and ctools deployment¶Installing gammalib and ctools anywhere as well as updating when new versions come out should be as easy as possible.

One great way is to add gammalib and ctools to the most common Linux and Mac package repositories.

There are many Linux distributions and several package formats are in widespread use.

Fortunately there are tools and services that help packaging libraries and tools for all of them from one template.

I read a bit about a few possibilities and Open Build Service implemented at the openSUSE Build Service seems the best option.

I think it can produce packages for all popular Linux distributions and architectures.

For status see issue #580.

gammalib to all two popular package managers:

There’s also Fink but it’s slowly dying and much less nice than Macports and Homebrew, so I think we should not even put gammalib in there and mention it for end-users (see issue #582).

In addition to deploying the software we might want to make it easy to get instrument IRFs, data and ancillary data (of course requiring a password for private data). Below a few thoughts, if we actually do this we should make wiki sub-pages.

cfitsio:

At the moment the Fermi LAT and CTA IRFs are contained in the gammalib repo. Due to the size, this is not possible for HESS and future CTA IRFs. Instead we should let the user set a CALDB environment variable and then provide a script to download / update the IRFs for data servers.

We should make it easy to download, organize and possibly pre-process data (e.g. for Fermi LAT, HESS, the CTA data challenges) to be ready to run analyses with the ctools.

Like for the IRFs probably letting the user set an environment variable where to put the data and then having a script to download / update / pre-process the data would work quite well.

Ultimately, support for data in the Virtual Observatory format should be implemented in GammaLib, so that data can be directly accessed via virtual observatory protocols. So far, no exploratory work has been done to implement this capability, but it should be the ultimate goal.

E.g. to run a Fermi data analysis you need the diffuse models and catalog. For HESS you need exclusion regions. These required ancillary files to run analyses could be easily deployed conveniently for the end user similar to the IRFs and data. Also here, virtual observatory protocols can be implemented to access these data.

Updated over 9 years ago by Knödlseder Jürgen

This page summarizes guidelines for the GammaLib development. We recommend that you follow these guidelines as closely as possible during your GammaLib developments.